Custom Connectors for Confluent Cloud Limitations and Support¶

This page describes the limitations and support for custom connectors. Be sure to review all the information on this page before proceeding with the quick start.

Limitations¶

The following are limitations for custom connectors:

- You can only create custom connectors in selected Amazon Web Services (AWS) and Microsoft Azure regions supported by Confluent Cloud.

- The cluster must use public internet endpoints. Currently, there is no support for using a set of public egress IP addresses.

- Custom Connect creates three or four role bindings when you provision a custom connector. These provide the connector with permissions required to operate. These role bindings count toward your Confluent Cloud organization role binding quota.

- You can only run Java-based Apache Kafka® custom connectors in Confluent Cloud.

- The custom connector framework currently supports JDK 11 only.

- Organizations are limited to 30 custom connectors and 100 plugins.

- Up to 5 tasks are supported per custom connector.

- Custom connectors cannot write data to a local file system in Confluent Cloud. If you configure your custom connector to write to the local file system, it will fail.

- Adhere to the connector naming conventions:

  - Do not exceed 64 characters.

  - A connector name can contain Unicode letters, numbers, marks, and the following special characters: . , & _ + | [] -. Note that you can use spaces, but using dashes (-) makes it easier to reference the connector in a programmatic way.
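The naming conventions above can be checked before you submit a connector. The following is a minimal sketch, not an official validator: the function name `connector_name_problems` is hypothetical, and it assumes that the 64-character limit counts Unicode code points and that "letters, numbers, marks" corresponds to the Unicode general categories L, N, and M.

```python
import unicodedata

MAX_NAME_LENGTH = 64
# Special characters listed in the naming conventions, plus the space character.
ALLOWED_SPECIALS = set(".,&_+|[]- ")


def connector_name_problems(name: str) -> list[str]:
    """Return a list of problems found in the name; an empty list means it passes."""
    problems = []
    # Assumption: the limit is counted in Unicode code points.
    if len(name) > MAX_NAME_LENGTH:
        problems.append(
            f"name has {len(name)} characters; the limit is {MAX_NAME_LENGTH}"
        )
    for ch in name:
        # Unicode general categories: L* = letters, N* = numbers, M* = marks.
        if unicodedata.category(ch)[0] in "LNM" or ch in ALLOWED_SPECIALS:
            continue
        problems.append(f"character {ch!r} is not allowed")
    return problems


if __name__ == "__main__":
    for candidate in ("orders-sink_v2", "bad/name"):
        print(candidate, connector_name_problems(candidate))
```

Returning a list of problems rather than a boolean makes it easier to report every violation at once, for example in a CI check that runs before provisioning.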

Important

Confluent regularly scans connectors uploaded through the Custom Connector product to detect if uploaded connectors are interacting with malicious endpoints or otherwise present a security risk to Confluent. If malicious activity is detected, Confluent may immediately delete the connector.

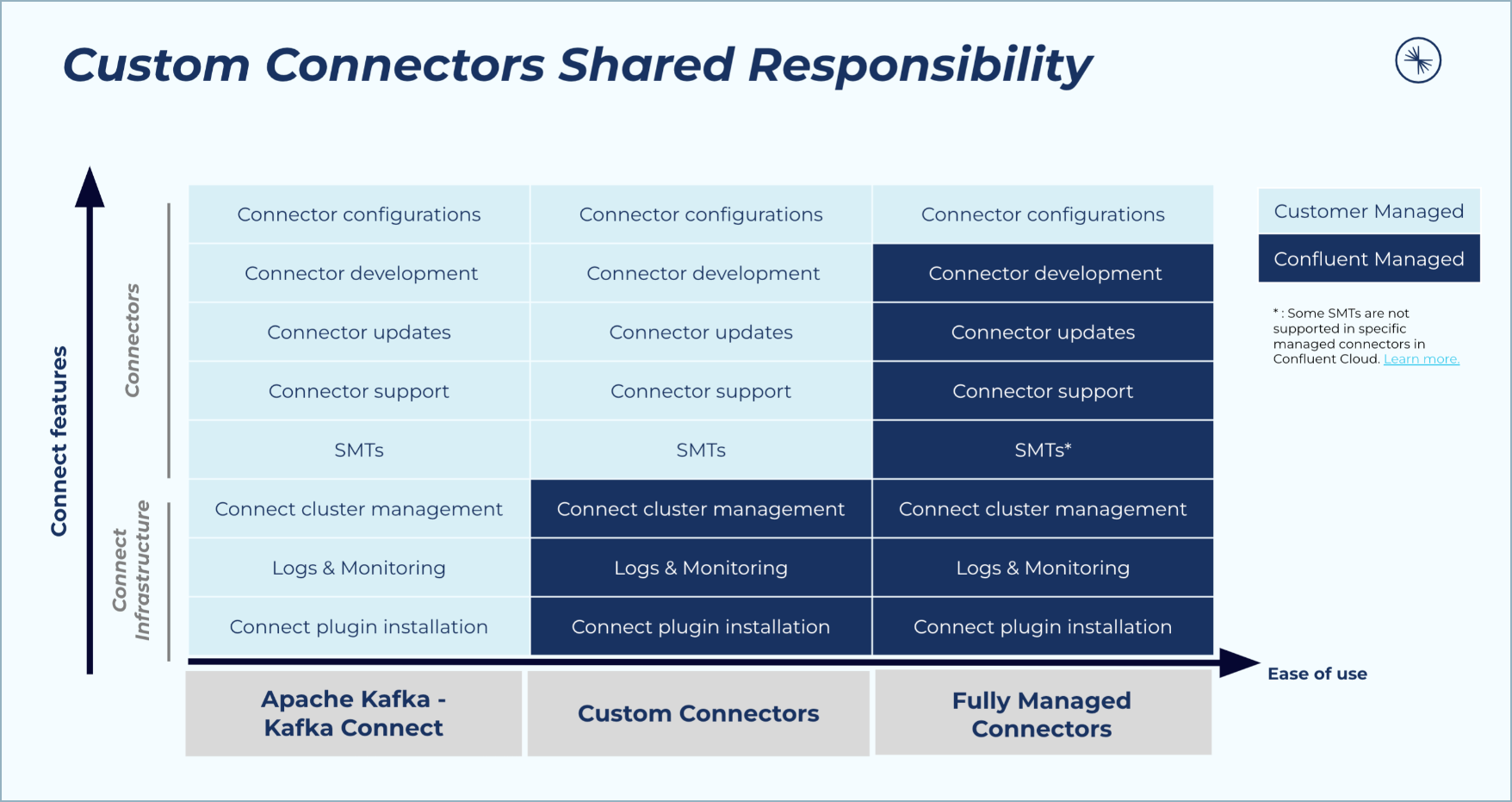

Confluent and Partner support¶

You are responsible for the connectors you upload through the Custom Connector feature.

- Customers that upload connectors to Confluent Cloud through the Custom Connector feature are responsible for management and support of the connector. Confluent does not provide support for custom connectors.

- Partner-built connectors that are published on Confluent Hub may not be tested or certified for Custom Connector functionality on Confluent Cloud. Confluent does not provide Enterprise support for customers who choose to provision a custom connector using a Partner-built connector that is not certified by Confluent.

- Confluent-built connectors are not tested or certified for Custom Connector functionality in Confluent Cloud. Confluent does not provide Enterprise support for customers who choose to provision a custom connector using a Confluent-built plugin.

The following table provides additional details about enterprise support.

Enterprise Support for Custom Connectors

| Connector Type | Confluent Cloud Infrastructure Support | Confluent Connector Support |
|---|---|---|
| Customer-built plug-in/connector | Confluent | Not supported by Confluent. |
| Partner-built plug-in/connector | Confluent | See Certified Partner-built connectors. |
| Confluent-built plug-in/connector | Confluent | Not supported by Confluent. When used for Custom Connectors, Confluent-built plugins and connectors (proprietary and community) are not supported by Confluent. |

Certified Partner-built connectors¶

The Connect plugins below are tested and supported by the listed Confluent Partner.

Certified Partner Connectors

| Confluent Partner | Confluent Hub Link | Documentation and Support |
|---|---|---|
| Ably | Ably Kafka Sink connector | Ably docs and support |
| ClickHouse | ClickHouse Kafka Sink connector | ClickHouse docs and support |
| Neo4j | Neo4j Kafka Sink and Source connectors | Neo4j docs and support |

Supported AWS and Azure regions¶

You can create custom connectors in the following AWS and Azure regions supported by Confluent Cloud.

AWS regions¶

- Africa:
  - af-south-1 (Cape Town)
- Asia Pacific:
  - ap-east-1 (Hong Kong)
  - ap-northeast-1 (Tokyo)
  - ap-northeast-2 (Seoul)
  - ap-northeast-3 (Osaka)
  - ap-south-1 (Mumbai)
  - ap-south-2 (Hyderabad)
  - ap-southeast-1 (Singapore)
  - ap-southeast-2 (Sydney)
  - ap-southeast-3 (Jakarta)
  - ap-southeast-4 (Melbourne)
- Canada:
  - ca-central-1 (Canada Central)
- Europe:
  - eu-central-1 (Frankfurt)
  - eu-central-2 (Zurich)
  - eu-north-1 (Stockholm)
  - eu-south-1 (Milan)
  - eu-south-2 (Spain)
  - eu-west-1 (Ireland)
  - eu-west-2 (London)
  - eu-west-3 (Paris)
- Middle East:
  - me-south-1 (Bahrain)
  - me-central-1 (UAE)
- South America:
  - sa-east-1 (São Paulo)
- United States:
  - us-east-1 (N. Virginia)
  - us-east-2 (Ohio)
  - us-west-2 (Oregon)

Azure regions¶

- Africa:
  - southafricanorth (Johannesburg)
- Asia Pacific:
  - australiaeast (New South Wales)
  - centralindia (Pune)
  - japaneast (Japan East)
  - koreacentral (Seoul)
  - southeastasia (Singapore)
- Canada:
  - canadacentral (Canada)
- Middle East:
  - uaenorth (Dubai)
- Europe:
  - francecentral (France)
  - germanywestcentral (Germany West Central)
  - northeurope (Ireland)
  - norwayeast (Oslo)
  - swedencentral (Gävle)
  - switzerlandnorth (Zurich)
  - uksouth (London)
  - westeurope (Netherlands)
- South America:
  - brazilsouth (Brazil South)
- United States:
  - centralus (Iowa)
  - eastus (Virginia)
  - eastus2 (Virginia)
  - southcentralus (South Central US)
  - westus2 (Washington)
  - westus3 (Phoenix)

Schema Registry integration¶

For a custom connector to use a Schema Registry-based format (for example, Avro, JSON_SR (JSON Schema), or Protobuf), Schema Registry must be enabled for the environment. Schema Registry can be enabled as a fully-managed service in Confluent Cloud or as a self-managed service.

Managed Schema Registry¶

When Schema Registry is enabled as a fully-managed service for your Confluent Cloud environment, you can automatically add the Schema Registry configuration properties to your custom connector using the UI. The following example shows the required configuration properties added to the custom connector configuration when using the Avro data format for both the key and value:

    {
      "confluent.custom.schema.registry.auto": "true",
      "key.converter": "io.confluent.connect.avro.AvroConverter",
      "value.converter": "io.confluent.connect.avro.AvroConverter"
    }
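If you generate connector configurations in a script rather than through the UI, the same three properties can be merged into an existing configuration. The sketch below is illustrative only: the helper name `with_managed_schema_registry` and the `CONVERTERS` mapping are hypothetical, and the JSON Schema converter class name is an assumption based on the standard Confluent converter packages, because this page shows only the Avro and Protobuf classes.

```python
import json

# Converter classes keyed by data format. The Avro and Protobuf class names
# appear on this page; the JSON_SR entry is an assumption.
CONVERTERS = {
    "AVRO": "io.confluent.connect.avro.AvroConverter",
    "JSON_SR": "io.confluent.connect.json.JsonSchemaConverter",
    "PROTOBUF": "io.confluent.connect.protobuf.ProtobufConverter",
}


def with_managed_schema_registry(config: dict, key_format: str, value_format: str) -> dict:
    """Return a copy of config with the managed Schema Registry properties added."""
    updated = dict(config)  # leave the caller's dictionary untouched
    updated["confluent.custom.schema.registry.auto"] = "true"
    updated["key.converter"] = CONVERTERS[key_format]
    updated["value.converter"] = CONVERTERS[value_format]
    return updated


if __name__ == "__main__":
    print(json.dumps(with_managed_schema_registry({}, "AVRO", "AVRO"), indent=2))
```

An unknown format name raises a `KeyError`, which surfaces typos early instead of producing a configuration with a missing converter.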

Self-managed Schema Registry¶

If you plan to use a self-managed Schema Registry configuration, the self-managed Schema Registry instance must be accessible over the public internet. You must also add all Schema Registry configuration entries, including credentials, to the connector configuration. For example:

    {
      "key.converter": "io.confluent.connect.protobuf.ProtobufConverter",
      "key.converter.basic.auth.credentials.source": "USER_INFO",
      "key.converter.basic.auth.user.info": "<username>:<password>",
      "key.converter.schema.registry.url": "https://defgh-ijk.us-west-2.aws.devel.cc.cloud",
      "value.converter": "io.confluent.connect.protobuf.ProtobufConverter",
      "value.converter.basic.auth.credentials.source": "USER_INFO",
      "value.converter.basic.auth.user.info": "<username>:<password>",
      "value.converter.schema.registry.url": "https://defgh-ijk.us-west-2.aws.devel.cc.cloud"
    }
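The key and value entries in the example above differ only in their prefix, so they can be generated from a single set of inputs. This is a minimal sketch under that assumption: the function name `self_managed_converter_settings` is hypothetical, and it assumes the same converter, URL, and credentials apply to both the key and the value. It does not verify that the Schema Registry instance is reachable over the public internet.

```python
def self_managed_converter_settings(converter_class: str, url: str, user_info: str) -> dict:
    """Build key.converter.* and value.converter.* entries for a self-managed Schema Registry."""
    settings = {}
    for side in ("key", "value"):
        prefix = f"{side}.converter"
        settings[prefix] = converter_class
        settings[f"{prefix}.basic.auth.credentials.source"] = "USER_INFO"
        settings[f"{prefix}.basic.auth.user.info"] = user_info
        settings[f"{prefix}.schema.registry.url"] = url
    return settings


if __name__ == "__main__":
    settings = self_managed_converter_settings(
        "io.confluent.connect.protobuf.ProtobufConverter",
        "https://defgh-ijk.us-west-2.aws.devel.cc.cloud",
        "<username>:<password>",  # placeholder; supply real credentials securely
    )
    for name, value in settings.items():
        print(f"{name} = {value}")
```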

App log topic¶

Note the following usage details for the app log topic:

- Customers are responsible for all charges related to using the app log topic with a custom connector. For all other billing details, see Manage Billing in Confluent Cloud.

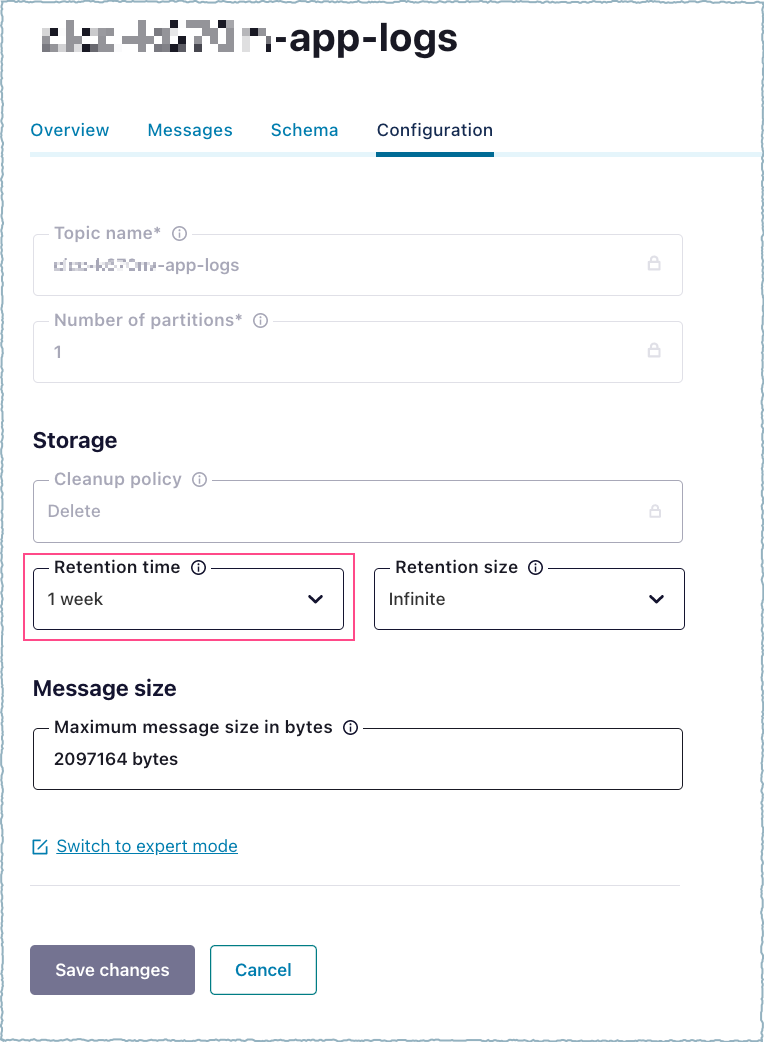

- Kafka topics have a default retention period of seven days. You must consume the logs from the app log topic within seven days. If you need logs for a longer period, change the log retention period configuration property. In the UI, open the appropriate log topic and change the Retention time.
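Kafka expresses topic retention in milliseconds, so a retention period given in days has to be converted before it is applied. The sketch below assumes the relevant setting is the standard Kafka topic-level property `retention.ms`; this page does not name the property, and the helper name `retention_ms` is hypothetical.

```python
from datetime import timedelta


def retention_ms(period: timedelta) -> int:
    """Convert a retention period to whole milliseconds."""
    # Floor-dividing one timedelta by another yields an exact integer.
    return period // timedelta(milliseconds=1)


if __name__ == "__main__":
    print("default (7 days):", retention_ms(timedelta(days=7)))
    print("extended (30 days):", retention_ms(timedelta(days=30)))
```

The seven-day default corresponds to 604800000 milliseconds. A longer period keeps more log data in the topic, which increases the storage charges that the customer is responsible for.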

Log retention period¶