Migrate data with Cluster Linking on Confluent Cloud¶

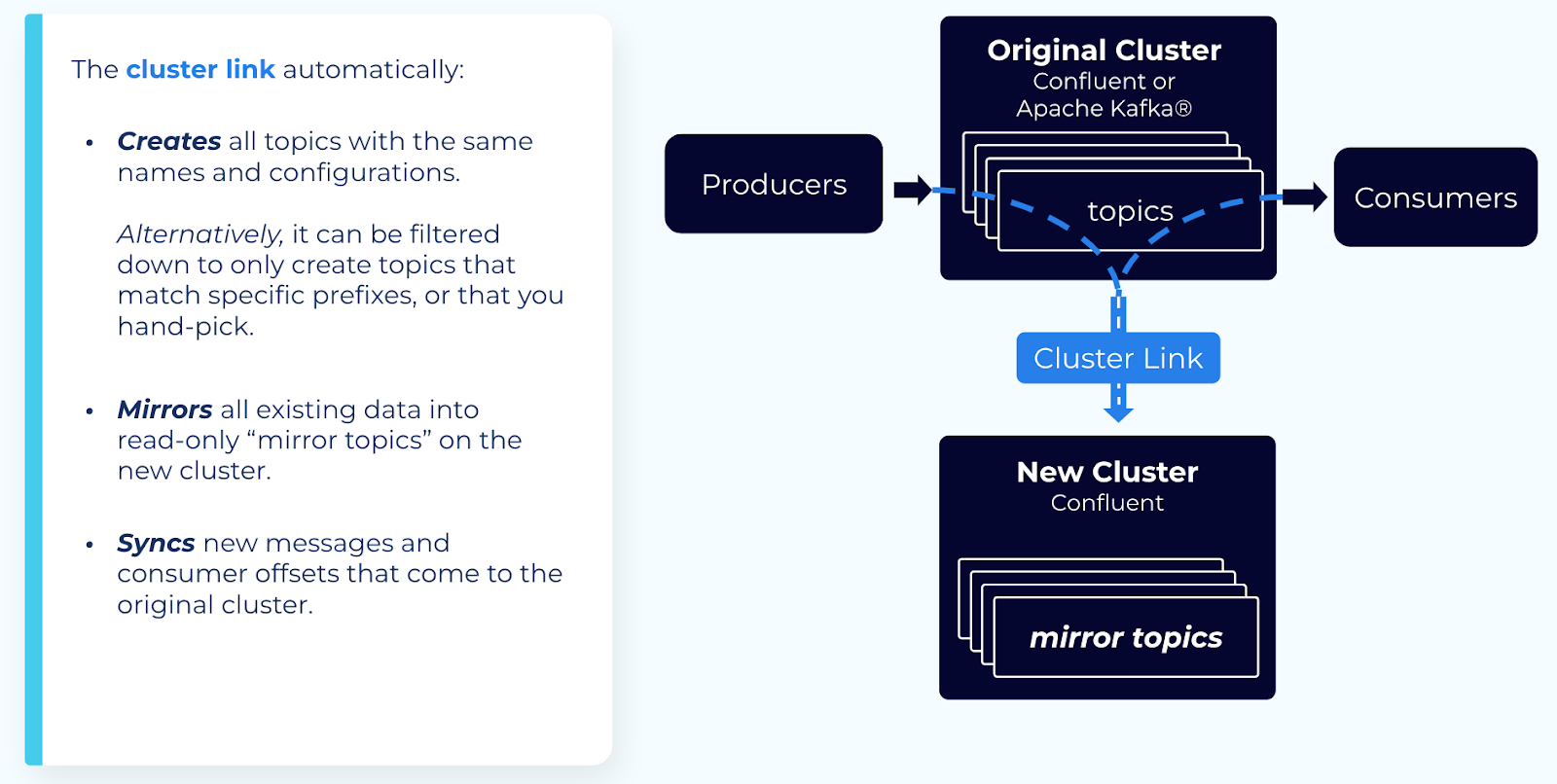

When migrating data from an old cluster to a new cluster, Cluster Linking makes an identical copy of your topics on the new cluster, so it’s easy to make the move with low downtime and no data loss.

Capabilities¶

Cluster Linking can:

- Automatically create matching “mirror” topics with the same configurations, so you don’t have to recreate your topics by hand

- Sync all historical data and new data from the existing topics to the new mirror topics

- Sync your consumer offsets, so your consumers can pick up exactly where they left off, without missing any messages or consuming any duplicates

- Move consumers from the old cluster to the new cluster independently

- Move producers from the old cluster to the new cluster topic-by-topic

Success stories¶

Read about successful migrations with Cluster Linking:

- In a technical presentation at Current 2022, SAS described their zero-downtime migration using Cluster Linking: Zero Down Time Move From Apache Kafka to Confluent. (The two Cluster Linking limitations that Justin mentions in the talk have both since been resolved.)

- New Kafka Tier, No Kafka Tears, published in Maker Stories by Wealthsimple, describes using Cluster Linking for migration to scale up existing Kafka systems

- SAS Powers Instant, Real-Time Omnichannel Marketing at Massive Scale with Confluent’s Hybrid Capabilities details SAS’s success in migrating large Kafka clusters with Cluster Linking.

- Namely describes their data migration projects in “Everywhere: Cloud Cluster Linking” under the subtopic Simplify geo-replication and multi-cloud data movement with Cluster Linking

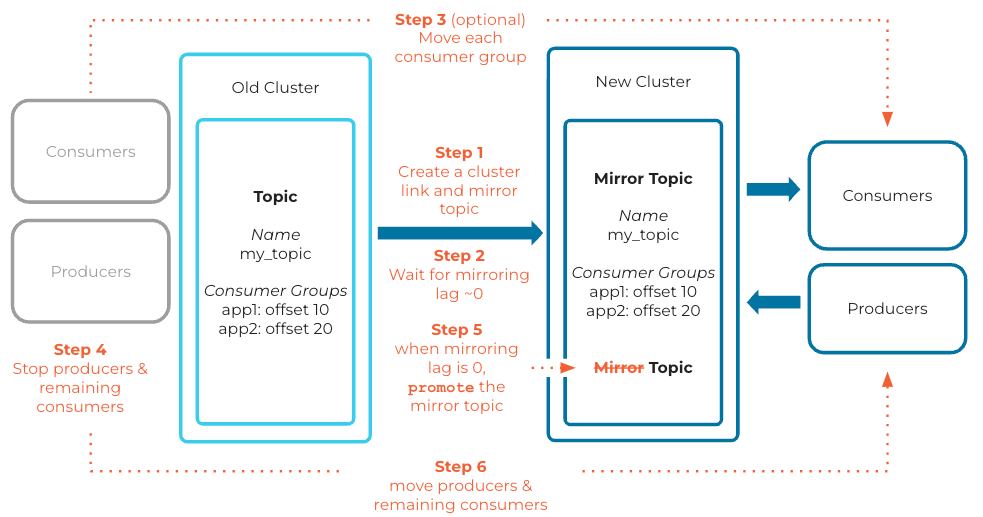

Standard migration with Cluster Linking¶

The sections below describe the general steps to migrate data from one cluster to another using Cluster Linking.

Step 1: Create a cluster link across two clusters¶

Create a cluster link with the following configurations:

Enable auto-create mirror topics.

This configures Cluster Linking to automatically create mirror topics on your new cluster for any existing topics on your old cluster. You can filter by specific prefixes, if needed.

Alternatively, you can create individual mirror topics by CLI or REST API after you’ve created the cluster link.

Enable consumer offset sync (see consumer offset configs in Configuring cluster link behavior).

This syncs your consumer offsets from your old cluster to your new cluster. You can filter by specific consumer group names or prefixes, if needed.

By default, this sync happens every 30 seconds. You can set it as low as 1 second, to minimize consumer downtime when switching from the old cluster to the new cluster. Consumer offsets are part of the data that your cluster link mirrors, so syncing them more frequently comes at the cost of higher data throughput. You can monitor your total data throughput in Confluent Cloud using the metrics shown under Mirroring Throughput in Metrics and Monitoring.

If migrating between two Confluent Cloud clusters, or two Confluent Platform / Apache Kafka® clusters with the same security system, enable ACL sync. You can filter by specific resources, principals, and so on, if needed.

Note

- ACL sync: ACL syncing is not helpful when migrating to Confluent Cloud from a different platform because Confluent Cloud uses its own authentication system.

- Schema Linking: To use a mirror topic that has a schema with Confluent Cloud Connect, ksqlDB, broker-side schema ID validation, or the topic viewer, make sure that Schema Linking puts the schema in the default context of the Confluent Cloud Schema Registry. To learn more, see How Schemas work with Mirror Topics.
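The settings from this step can be collected into a properties file that is passed when the cluster link is created. The following is a minimal sketch, not a definitive configuration: `consumer.offset.sync.ms` and `consumer.offset.group.filters` are named on this page, while `auto.create.mirror.topics.enable`, `consumer.offset.sync.enable`, and the JSON filter layout are assumptions based on the Cluster Linking configuration reference and should be verified there. The helper name `build_link_config` is hypothetical, and ACL sync is left out.

```python
import json


def build_link_config(group_prefix=None, offset_sync_ms=30000):
    """Return cluster link settings as Java-properties text.

    Property names other than consumer.offset.sync.ms and
    consumer.offset.group.filters are assumptions; check them against
    the Cluster Linking configuration reference before use.
    """
    if offset_sync_ms < 1000:
        # The text above states the sync interval can be set as low as 1 second.
        raise ValueError("consumer offset sync interval cannot go below 1 second")

    # Sync every consumer group by default, or only groups whose names
    # start with group_prefix when one is given.
    group_filter = {
        "name": group_prefix or "*",
        "patternType": "PREFIXED" if group_prefix else "LITERAL",
        "filterType": "INCLUDE",
    }
    settings = {
        "auto.create.mirror.topics.enable": "true",
        "consumer.offset.sync.enable": "true",
        "consumer.offset.sync.ms": str(offset_sync_ms),
        "consumer.offset.group.filters": json.dumps({"groupFilters": [group_filter]}),
    }
    return "\n".join(f"{key}={value}" for key, value in settings.items()) + "\n"


if __name__ == "__main__":
    # A 5-second sync interval trades extra mirroring throughput for
    # a shorter consumer cutover window.
    print(build_link_config(offset_sync_ms=5000))
```

Lowering `offset_sync_ms` shortens the wait before a consumer group can be moved, at the cost of the extra throughput described above.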

Step 2: Wait for mirroring lag to approach zero (0)¶

When mirroring lag is almost zero (0), this means that the existing data in your topics has been mirrored to your new cluster.

This allows you to switch your consumers and producers with minimal downtime.

If you want certain topics to be ready before others, you can prioritize those topics by pausing mirroring on the other topics. That way, more throughput will be allocated to the topics you prioritize.

If your cluster link is having trouble keeping up with the incoming data and is not able to get mirroring lag near 0, you may need to prioritize certain topics by pausing mirroring on the other topics.
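The prioritization rule above can be expressed as a small decision helper. This is only a sketch under stated assumptions: the lag values are taken to be per-topic mirroring lag in messages that you have already collected from your metrics, and the `near_zero` threshold and the function name `topics_to_pause` are hypothetical. It suggests which topics to pause; it does not call any Confluent API.

```python
def topics_to_pause(lag_by_topic, priority_topics, near_zero=100):
    """Suggest mirror topics to pause so priority topics can catch up.

    lag_by_topic: dict of topic name -> current mirroring lag (messages).
    priority_topics: set of topic names that must be ready first.
    near_zero: hypothetical threshold for "lag is almost zero".
    """
    priority_behind = any(
        lag_by_topic.get(topic, 0) > near_zero for topic in priority_topics
    )
    if not priority_behind:
        # Priority topics are already caught up, so nothing needs pausing.
        return []
    # Pause every non-priority topic to free throughput for the rest.
    return sorted(topic for topic in lag_by_topic if topic not in priority_topics)


if __name__ == "__main__":
    lags = {"orders": 5000, "clicks": 20, "audit": 90000}
    print(topics_to_pause(lags, {"orders"}))
```

Once the priority topics are caught up, the helper returns an empty list, which is the signal to resume mirroring on the paused topics.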

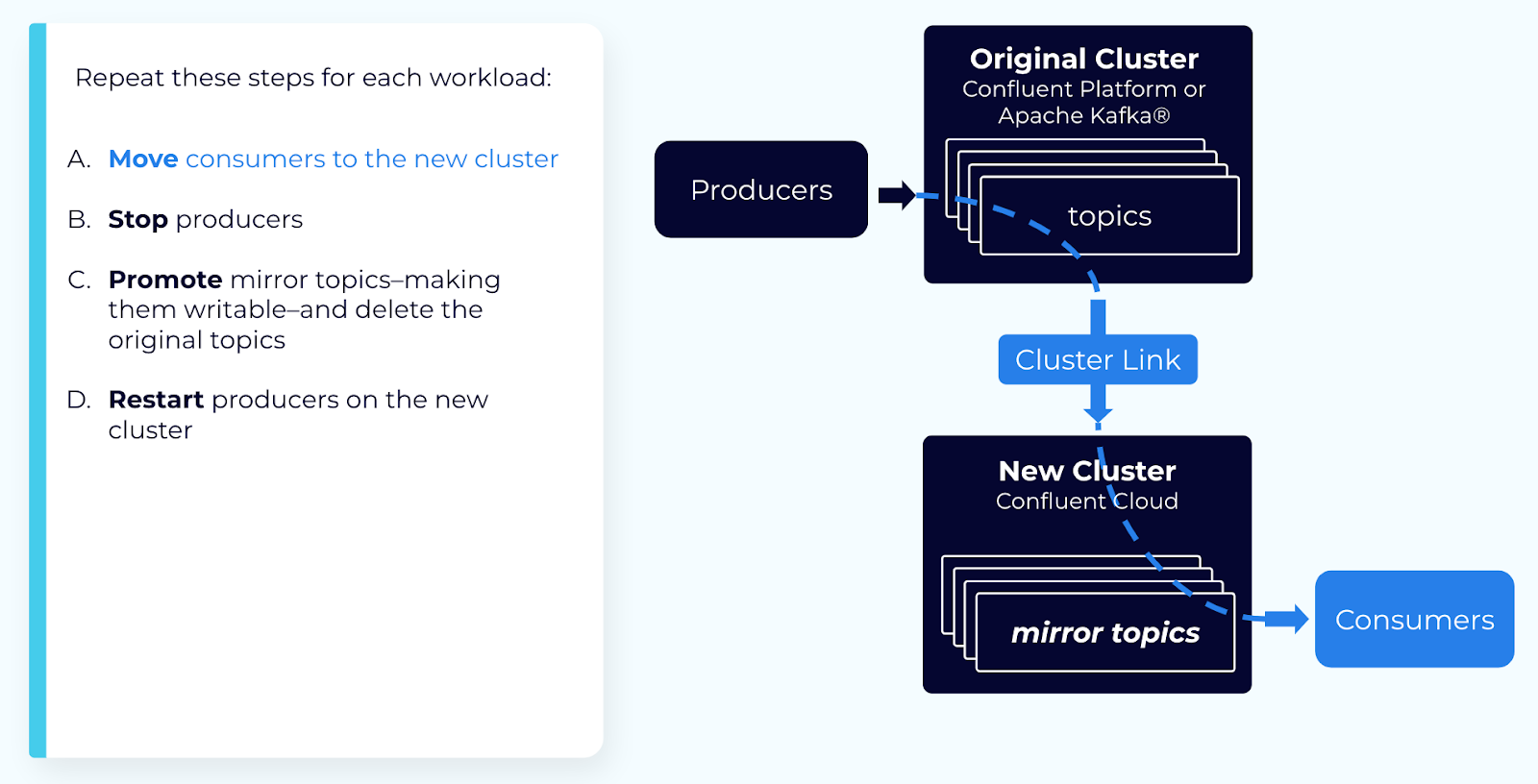

(Optional) Step 3: Move consumer groups from the old cluster to the new cluster¶

You can move each consumer group independently, if you wish. Because consumer offsets are synced, consumers will pick up from the same spot where they left off. To move a consumer group, follow these steps:

Stop the consumer group on the old cluster.

Wait for at least `consumer.offset.sync.ms` (default is 30 seconds) to ensure its latest offsets have been synced.

Exclude that consumer group’s name from the cluster link, in the `consumer.offset.group.filters` setting.

Verify that the topic offsets the consumer group is at have been synced to the mirror topic.

You can do this by checking that consumer lag > mirroring lag.

Before you start the consumer on the new cluster, ensure that the offsets the consumer has reached have been mirrored to the mirror topic. If the consumer is ahead of the mirroring, its offsets will be reset to the latest offsets in the mirror topic, and it will consume duplicates.

For example, if the consumer is at offset 100 for a partition, but you start the consumer when the mirror topic is only at offset 90, then the consumer will start consuming from the end of the mirror topic (offset 90), and will re-consume messages 90-100.

Restart the consumer group on the new cluster.
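The verification step above amounts to a per-partition comparison between the group's committed offsets and the mirror topic's end offsets. The sketch below assumes you have already fetched both sets of offsets (for example, with your Kafka admin tooling) into plain dictionaries; the function name `partitions_not_ready` is hypothetical.

```python
def partitions_not_ready(committed_offsets, mirror_end_offsets):
    """Find partitions where the consumer group is ahead of the mirror topic.

    committed_offsets: dict of partition -> offset committed on the old cluster.
    mirror_end_offsets: dict of partition -> end offset of the mirror topic.
    Returns a dict of partition -> (mirror_end, committed) for every
    partition that is not yet safe to consume from on the new cluster.
    """
    not_ready = {}
    for partition, committed in committed_offsets.items():
        mirror_end = mirror_end_offsets.get(partition, 0)
        if committed > mirror_end:
            # Starting now would reset the consumer to mirror_end, and
            # the messages between the two offsets would be read again.
            not_ready[partition] = (mirror_end, committed)
    return not_ready


if __name__ == "__main__":
    committed = {0: 100, 1: 40}
    mirror_end = {0: 90, 1: 55}
    # Partition 0 matches the example above: consumer at 100, mirror at 90.
    print(partitions_not_ready(committed, mirror_end))
```

An empty result means every partition has been mirrored at least as far as the consumer group has read, so the group can be restarted on the new cluster.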

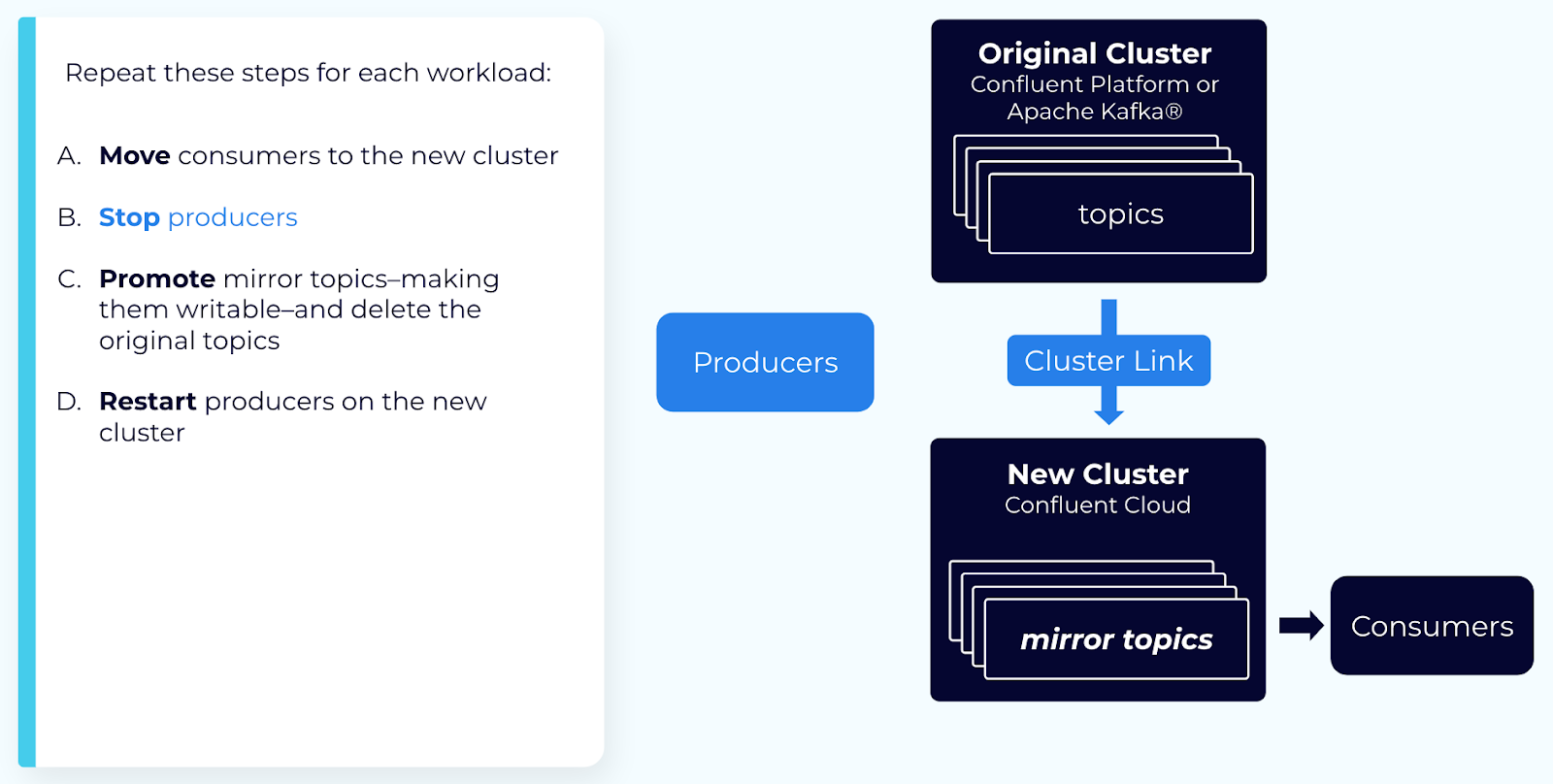

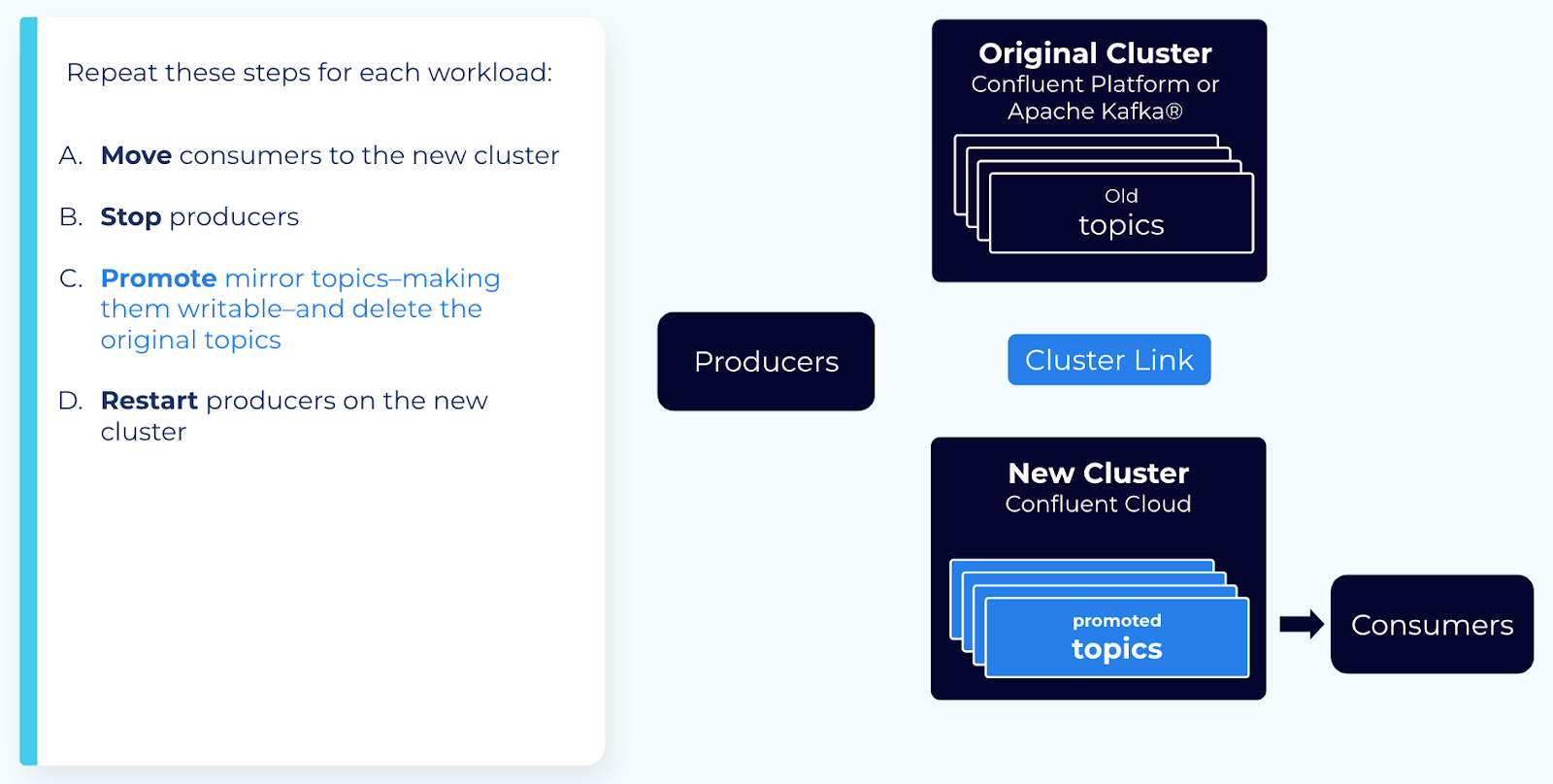

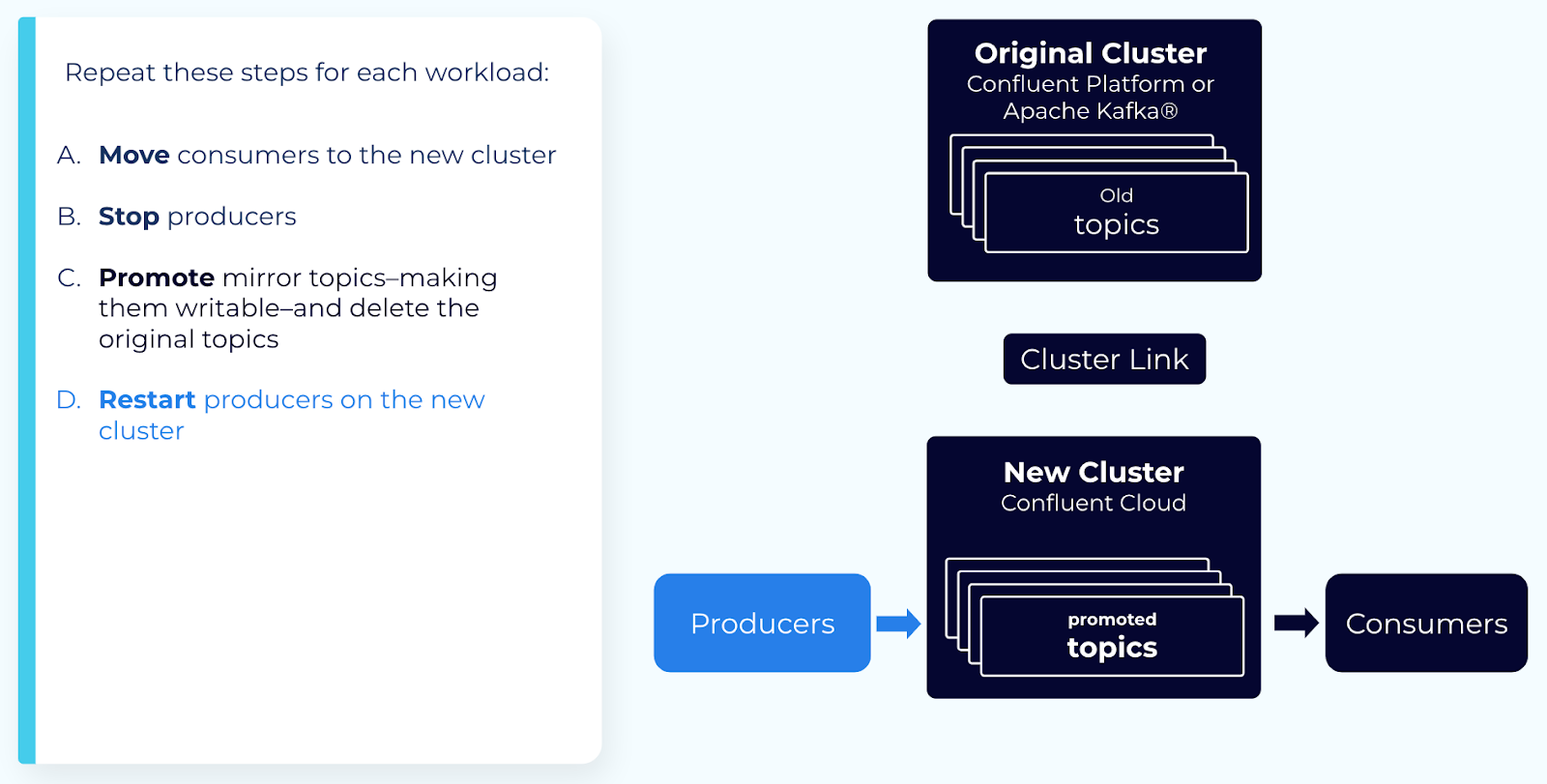

Step 4: Stop producers and consumers¶

Stop all producers and any remaining consumers. This gives the cluster link a chance to “catch up,” without new messages coming in.

Step 5: Promote the mirror topic¶

Important

- When deleting a cluster link, first check that all mirror topics are in the `STOPPED` state. If any are in the `PENDING_STOPPED` state, deleting a cluster link can cause irrecoverable errors on those mirror topics due to a temporary limitation.

- Make sure that you’ve stopped all producers and consumers on the source topic on your original cluster. After you call promote, mirroring and consumer offset sync stop permanently. After promote, if any producers produce messages to the source topic on the original cluster, those messages will not be migrated. After promote, if any consumers consume messages from the source topic on the original cluster, their offsets will not be synced, and they will consume duplicates when they move to the new cluster.

When mirroring lag is near 0, call the promote Cluster Linking API on the mirror topic.

This will convert the mirror topic into a normal, writable topic.

You must wait for mirroring lag to go to zero (0) to ensure all messages have been replicated to your new cluster.

The promote command checks to make sure the topic is not lagging before succeeding, but you should also check the mirroring lag metric.

Step 6: Restart producers and consumers¶

Wait for the promotion to complete and the mirror topics to enter the STOPPED state. Mirror topic state can be found in the REST API or CLI by describing an individual mirror topic or all mirror topics on a cluster link. In the Confluent Cloud Console, a STOPPED mirror topic will appear as a regular topic, and no longer be displayed as a mirror topic.

Once the mirror topics are in the STOPPED state, you can restart the producers and consumers that use them on the new cluster.

Caution

Producing to a mirror topic that is still in the PENDING_STOPPED state can cause messages to fail; consuming from a mirror topic that is still in the PENDING_STOPPED state can cause the consumer to consume duplicate messages.
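Waiting for every promoted topic to reach STOPPED before restarting clients can be automated with a simple polling loop. This is a sketch only: `fetch_states` is a hypothetical callable that you would implement yourself (for example, by describing the mirror topics through the REST API or CLI) and that returns a dictionary of topic name to state. The timeout and poll interval are arbitrary defaults.

```python
import time


def wait_for_stopped(fetch_states, timeout_s=300.0, poll_s=5.0,
                     sleep=time.sleep, clock=time.monotonic):
    """Block until every mirror topic reports the STOPPED state.

    fetch_states: hypothetical callable returning {topic_name: state}.
    Raises TimeoutError if any topic is still not STOPPED (for example,
    PENDING_STOPPED) when the timeout expires.
    """
    deadline = clock() + timeout_s
    while True:
        states = fetch_states()
        pending = sorted(
            topic for topic, state in states.items() if state != "STOPPED"
        )
        if not pending:
            return True
        if clock() >= deadline:
            raise TimeoutError(f"mirror topics not yet STOPPED: {pending}")
        sleep(poll_s)


if __name__ == "__main__":
    # Simulated responses: the topic finishes promotion on the second poll.
    responses = iter([{"orders": "PENDING_STOPPED"}, {"orders": "STOPPED"}])
    print(wait_for_stopped(lambda: next(responses), poll_s=0.0))
```

Restarting producers and consumers only after this function returns avoids the failure modes described in the caution above.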

You have now moved your topics, producers, and consumers to a new cluster.

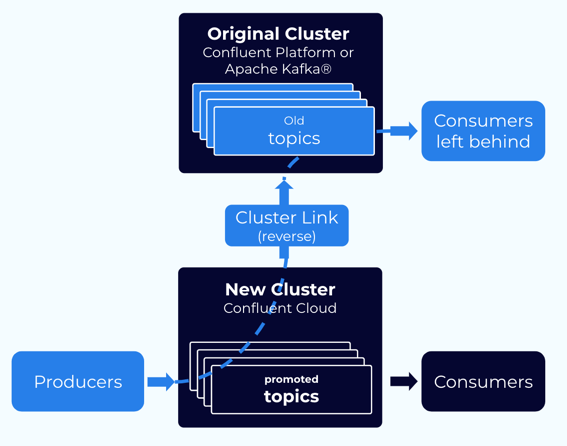

Step 7: Extra steps when leaving some consumers on the original cluster¶

If you want to leave some of your consumer groups on your original cluster, you must take some extra steps. You will need to get mirroring flowing in the reverse direction: from your new cluster back to your original cluster.

Note

- A given consumer group should only consume from one cluster. A consumer group cannot be “stretched” between two clusters.

- This strategy is only available for Confluent clusters, not for Apache Kafka® clusters, as Cluster Linking cannot move data to an Apache Kafka® cluster.

- Make sure that the cluster link you used for migration was mirroring these consumer group offsets too, even though the consumers won’t move. The consumer offsets will temporarily be on the new cluster.

- Make sure that your consumer group(s) have stopped consuming (in step 4, above).

- Delete the original topics on the original cluster.

- Create a cluster link in the reverse direction: from the new cluster to the original cluster. Have this cluster link sync the consumer offsets for these consumer groups. This will move the consumer offsets back to the original cluster.

- Create mirror topic(s) on the original cluster. This will start data flowing from the new cluster to the original cluster.

- Once the mirror topic has mirrored up to the offsets where the consumer group(s) were, exclude those consumer group(s) from the cluster link’s consumer offset sync. This will stop the cluster link from syncing their consumer offsets.

- Restart the consumer group(s) on the original cluster. (See the caveats in step 3, above.)

Using an Apache Kafka® or Confluent Platform source cluster¶

To use an Apache Kafka® or Confluent Platform source cluster, your source cluster must be either:

- Accessible through public IP addresses for Confluent Cloud to reach out to, with the following requirements:

  - You will need to know your source cluster’s bootstrap server and cluster ID in order to use it. You can find the source cluster ID with the command-line tool `kafka-cluster cluster-id` that comes with Confluent Platform and Apache Kafka®. Your cluster’s administrator will know its bootstrap server.

  - If your source cluster is set up with security, you will need to provide security credentials to your cluster link’s configuration, as described in Configuring security credentials for Confluent Platform and Kafka source clusters.

Or,

- Running Confluent Platform 7.1+, using source-initiated cluster links, and have access to the Confluent Cloud destination cluster. To learn more about this configuration, see Link Self-Managed and Confluent Cloud Clusters for Hybrid Cloud and Bridge-to-Cloud Deployments.

Alternate migration strategies¶

If you cannot move all of the producers for a given topic at the same time, you can consider the alternate approaches below. They involve more hands-on work than the standard migration approach with Cluster Linking.

Repartitioning and renaming topics in a migration¶

Cluster Linking preserves the same number of partitions on any topics it mirrors. It also keeps the topic name the same, though you can optionally add a prefix before the name.

Here are the options for migrations that need partition or name changes for the topics being migrated:

(Recommended Approach) Use Cluster Linking to mirror the topics byte-for-byte to the new cluster. Then, use Confluent Cloud ksqlDB or Confluent Cloud for Flink SQL to repartition each of the topics into your desired number of partitions. The advantage of this approach is that both of these tools are fully managed and API-driven. Keep these points in mind:

- If you have many topics, you may want to do this in batches.

- If you want to change the topic name after the migration, ksqlDB can do that.

- If you want to keep the topic name the same after the migration, then have the cluster link add a prefix to the topic names, and have ksqlDB create a repartitioned topic using the original name.

To use Flink SQL, construct the query based on one of these two scenarios:

You want to repartition from source into sink, and you already created the sink (with the desired number of partitions). When that’s the case, the query is:

INSERT INTO sink SELECT * FROM source;

You want to repartition from source to sink, but have not yet created the sink with the desired number of partitions. The query then becomes:

CREATE TABLE sink_with_12_partitions DISTRIBUTED INTO 12 BUCKETS AS SELECT * FROM source;

(Alternate Approach) Deploy Confluent Replicator for the migration. Replicator can inherently change the number of partitions and/or the names of the topics that you want to replicate. Keep in mind that Replicator is not a fully-managed SaaS service. It is software that you must deploy, manage, and monitor across multiple nodes and VMs that you own, or on a Kubernetes cluster using Confluent for Kubernetes. This is a large investment that takes more effort and Kafka expertise than setting up Cluster Linking.

In either case, you will need to develop a custom strategy for moving consumer groups from the old cluster to the new cluster. None of these technologies — Cluster Linking, ksqlDB, or Replicator — can correctly translate consumer offsets for you, since the partitions are changing and thus the offsets are not consistent.
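To see why offsets cannot be carried over after repartitioning, consider how keys map to partitions when the partition count changes. The sketch below is illustrative only: it uses `zlib.crc32` as a stand-in hash (real Kafka clients use a different default partitioner), and the function names are hypothetical. The point is that some keys land in a different partition once the count changes, so an offset, which is a position within one partition, no longer refers to the same message.

```python
import zlib


def partition_for(key, num_partitions):
    """Map a key to a partition with a stand-in hash.

    crc32 is used here only for illustration; it is NOT the hash that
    Kafka's default partitioner uses.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def moved_keys(keys, old_count, new_count):
    """Return the keys whose partition differs between the two counts."""
    return [
        key for key in keys
        if partition_for(key, old_count) != partition_for(key, new_count)
    ]


if __name__ == "__main__":
    keys = [f"user-{i}" for i in range(20)]
    moved = moved_keys(keys, old_count=6, new_count=12)
    print(f"{len(moved)} of {len(keys)} keys change partition going from 6 to 12")
```

Because messages are redistributed like this, a committed offset of, say, 100 on partition 3 of the original topic has no meaningful counterpart on the repartitioned topic.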

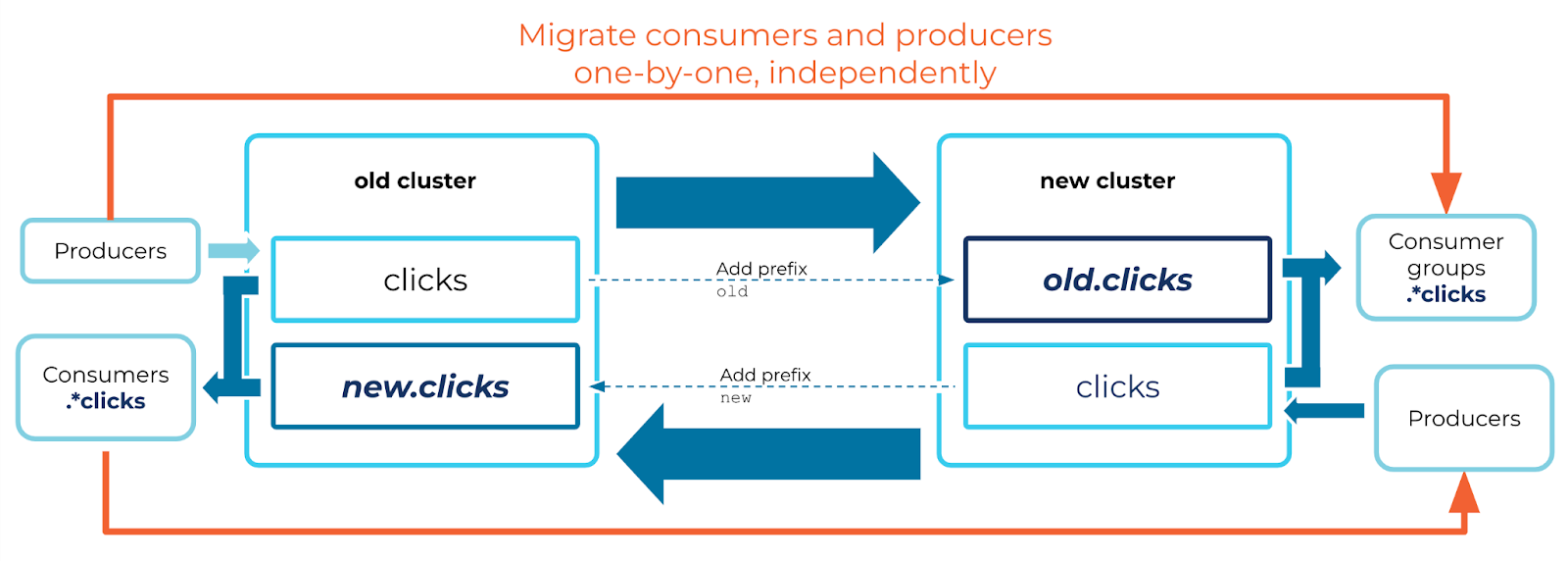

Bidirectional with Cluster Linking¶

Mirror topics are read-only. Therefore, if you migrate some producers but not others, you cannot have those producers writing to the mirror topic on the new cluster. You’ll need a different topic to write these events to, and you’ll need to sync those events back to your old cluster for any consumers that haven’t moved yet. You’ll also need to make some changes to your consumers to make sure they get the events produced to both clusters.

For a given topic, you can set up three new topics:

A new, regular topic on your new cluster by the same name. This topic will receive new events produced to your new cluster.

A mirror topic on your new cluster, which mirrors historical data and any new events produced to the old cluster. You’ll give this topic a prefix, so it doesn’t clash with the writable topic.

Tip

Prefixing is available in Confluent Cloud as of early Q2 2022.

A mirror topic on your old cluster, which mirrors the writable topic from the new cluster. This brings new events back to your old cluster for straggling consumers.

There are several changes you need to make to your consumers to make this work:

- Your consumers need to consume from a regex pattern, instead of a topic name, that will capture both topics.

  For example, if your topic is named `clicks`, the consumers could consume from the pattern `.*clicks` to consume from both `clicks` and the prefixed topics.

- When you move a consumer group, you must manually set its offsets on the new cluster for the writable topic. Because this is moving “upstream,” the cluster link does not sync its consumer offsets from the mirror topic on the old cluster.

- Because your consumers are consuming from two different topics, you cannot rely on the partitions for ordering. A message with a given key will be produced to one partition on the old cluster and a different partition on the new cluster. Two messages with the same key may be read in different order by different consumers. So, your consumers must use something else to determine message order, such as the timestamp.
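The consumer-side changes above can be sketched in a few lines. This example makes several assumptions: the prefix `legacy.` is hypothetical, the regex is applied to the whole topic name (as the Java consumer's pattern subscription does), and events are represented as plain dictionaries with a `timestamp` field rather than real Kafka records. It shows which topics the pattern `.*clicks` would capture and how two per-topic streams can be merged by timestamp instead of relying on partition order.

```python
import heapq
import re

# Pattern from the example above; it must match the full topic name.
TOPIC_PATTERN = re.compile(r".*clicks")


def matching_topics(topic_names):
    """Return the topics that a whole-name regex subscription would pick up."""
    return sorted(t for t in topic_names if TOPIC_PATTERN.fullmatch(t))


def merge_by_timestamp(*streams):
    """Merge event lists, each already sorted by timestamp, into one ordered list."""
    return list(heapq.merge(*streams, key=lambda event: event["timestamp"]))


if __name__ == "__main__":
    print(matching_topics(["clicks", "legacy.clicks", "clicks.dlq", "orders"]))

    writable_topic = [{"timestamp": 2, "key": "u1"}, {"timestamp": 5, "key": "u1"}]
    mirror_topic = [{"timestamp": 1, "key": "u1"}, {"timestamp": 4, "key": "u1"}]
    merged = merge_by_timestamp(writable_topic, mirror_topic)
    print([event["timestamp"] for event in merged])
```

Note that `.*clicks` also matches any unrelated topic whose name ends in `clicks`, so a tighter pattern may be preferable. Timestamp ordering is also only as reliable as the clocks of the producers that set those timestamps.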

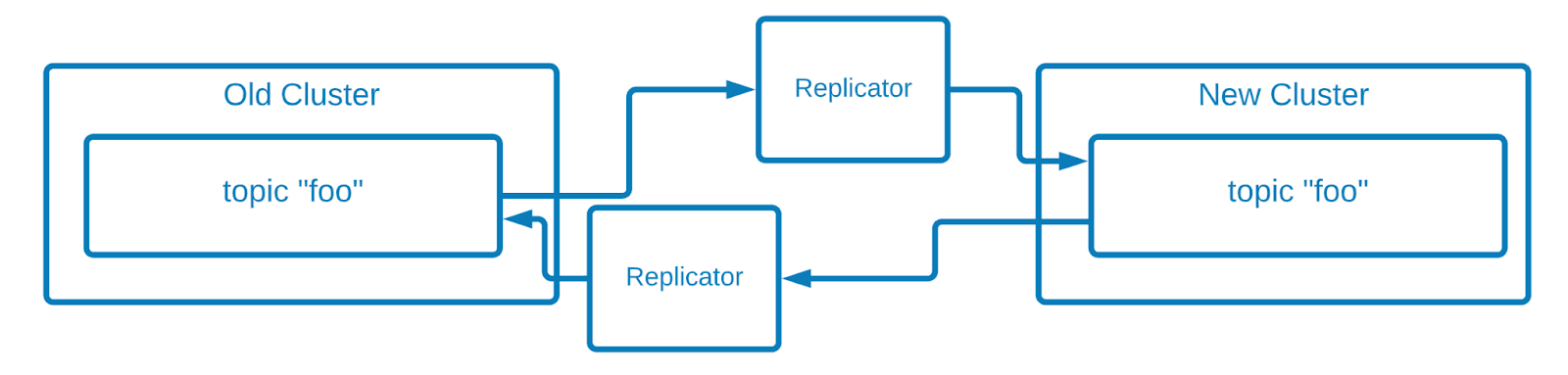

Bidirectional with Replicator¶

Confluent Replicator can be run in VMs or in a Kubernetes cluster. It syncs messages between topics in two different clusters.

You can set up two deployments of Replicator to achieve bi-directional replication. Replicator ensures that no cyclical loops are created; that is, that the same message doesn’t get replicated back to the original cluster where it was produced.

However, the ordering between these two topics will not be the same. That means it is impossible for a consumer to move from the `old` cluster to the `new` cluster and pick up at the same spot where it left off. The consumer must choose to either:

- Rewind to an earlier offset in order to ensure that no messages are missed. However, this will cause the consumer to consume duplicates of some messages. Or,

- Start consuming at the end of the topic, which will cause it to miss the most recently produced messages.