Geo-replication with Cluster Linking on Confluent Cloud¶

The following sections provide an overview of Cluster Linking on Confluent Cloud, including an explanation of what it is and how it works, success stories, supported cluster types, a tutorial, and more.

What is Cluster Linking?¶

Cluster Linking on Confluent Cloud is a fully-managed service for replicating data from one Confluent cluster to another. Programmatically, it creates perfect copies of your topics and keeps data in sync across clusters. Cluster Linking is a powerful geo-replication technology for:

- Multi-cloud and global architectures powered by real-time data in motion

- Data sharing between different teams, lines of business, or organizations

- High Availability (HA)/Disaster Recovery (DR) during an outage of a cloud provider’s region

- Data and workload migration from an Apache Kafka® or Confluent Platform cluster to Confluent Cloud

- Protection of Tier 1, customer-facing applications and workloads from disruption by creating a read-replica cluster for lower-priority applications and workloads

- Hybrid cloud architectures that supply real-time data to applications across on-premises datacenters and the cloud

- Syncing data between production environments and staging or development environments

Cluster Linking is fully-managed in Confluent Cloud, so you don’t need to manage or tune data flows. Its usage-based pricing puts multi-cloud and multi-region costs into your control. Cluster Linking reduces operational burden and cloud egress fees, while improving the performance and reliability of your cloud data pipelines.

Important

There are restrictions on Cluster Linking features with regard to Basic, Standard, and Enterprise clusters, Private Networking, and Apache Kafka® Streams and Kafka Transactions.

- For details on capabilities of cluster types, see Kafka Cluster Types in Confluent Cloud, and the Cluster Linking capabilities sections for Basic, Standard, Enterprise, and Dedicated cluster types.

- For a Cluster Linking support matrix per cluster type, see Supported cluster types.

- For supported cluster combinations for Private Networking, see Supported cluster combinations in Manage Private Networking for Cluster Linking on Confluent Cloud.

- For details on limitations with regard to Kafka transactions, security requirements, management, and more, see Limitations.

Success stories¶

In this technical presentation at Current 2022, SAS described their zero-downtime migration using Cluster Linking: Zero Down Time Move From Apache Kafka to Confluent. (The two Cluster Linking limitations that Justin mentions have both since been resolved.)

Real-Time Inter-Agency Data Sharing With Kafka shows how Apache Kafka® and Cluster Linking have transformed the way government agencies share data: in real time, with faster onboarding of new data sets, real-time event notification, reduced cost for data sharing, and enhanced and enriched data sets for improved data quality.

1-800 Flowers used Cluster Linking for a disaster recovery strategy and for multi-cloud data movement (minute 46 of this webinar).

“In three months, we went from having no DR strategy to a production multi-cloud DR capability based on real-time data architecture that also supported high performance regional applications.”

Confluent Sets Data in Motion Across Hybrid and Multicloud Environments for Real-Time Connectivity Everywhere lists success stories implementing Cluster Linking under the subheading “Cluster Linking: Seamlessly Connect Applications and Data Systems Across Hybrid and Multicloud Architectures”.

- Freeman talks about building a hybrid cloud and HA/DR architecture

- SAS talks about completing a large-scale migration

New Kafka Tier, No Kafka Tears, published in Maker Stories by Wealthsimple, describes using Cluster Linking for a migration to scale up existing Kafka systems.

Namely describes their data migration project in “Everywhere: Cloud Cluster Linking” under the subtopic “Simplify geo-replication and multi-cloud data movement with Cluster Linking”.

SAS used Cluster Linking to migrate to Confluent for Kubernetes and other cloud-native solutions. Read the full story in SAS Powers Instant, Real-Time Omnichannel Marketing at Massive Scale with Confluent’s Hybrid Capabilities, under the subtopic “A much easier migration thanks to Cluster Linking”.

How it works¶

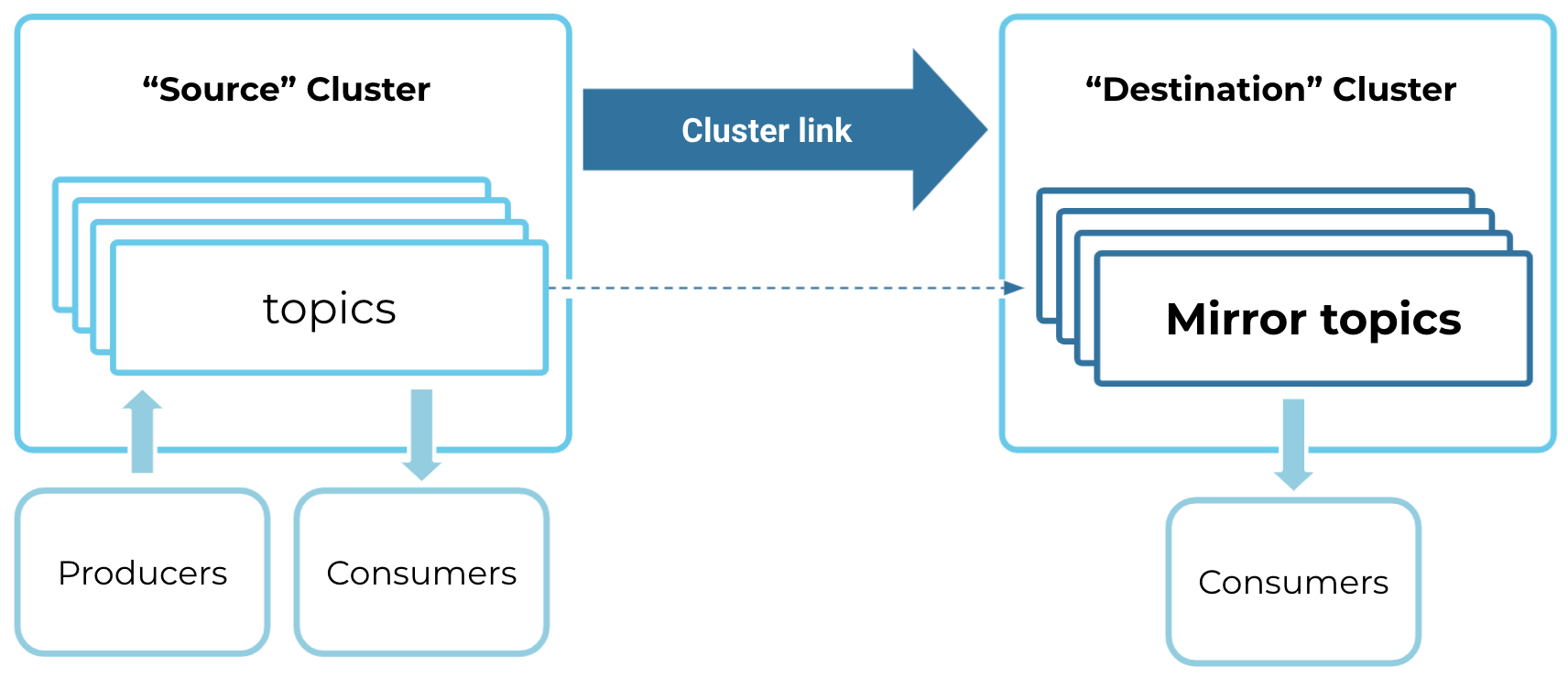

Cluster Linking allows one Confluent cluster to mirror data directly from another. You can establish a cluster link between a source cluster and a destination cluster in a different region, cloud, line of business, or organization. You choose which topics to replicate from the source cluster to the destination. You can even mirror consumer offsets and ACLs, making it straightforward to move Kafka consumers from one cluster to another.

In one command or API call, you can create a cluster link from one cluster to another. A cluster link acts as a persistent bridge between the two clusters.

confluent kafka link create tokyo-sydney \
--source-bootstrap-server pkc-867530.ap-northeast-1.aws.confluent.cloud:9092 \
--source-cluster lkc-42492 \
--source-api-key AP1K3Y \
--source-api-secret ********

Tip

--source-cluster-id was replaced with --source-cluster in version 3 of the confluent CLI, as described in the command reference for confluent kafka link create.

To mirror data across the cluster link, you create mirror topics on your destination cluster.

confluent kafka mirror create clickstream.tokyo \
--link tokyo-sydney

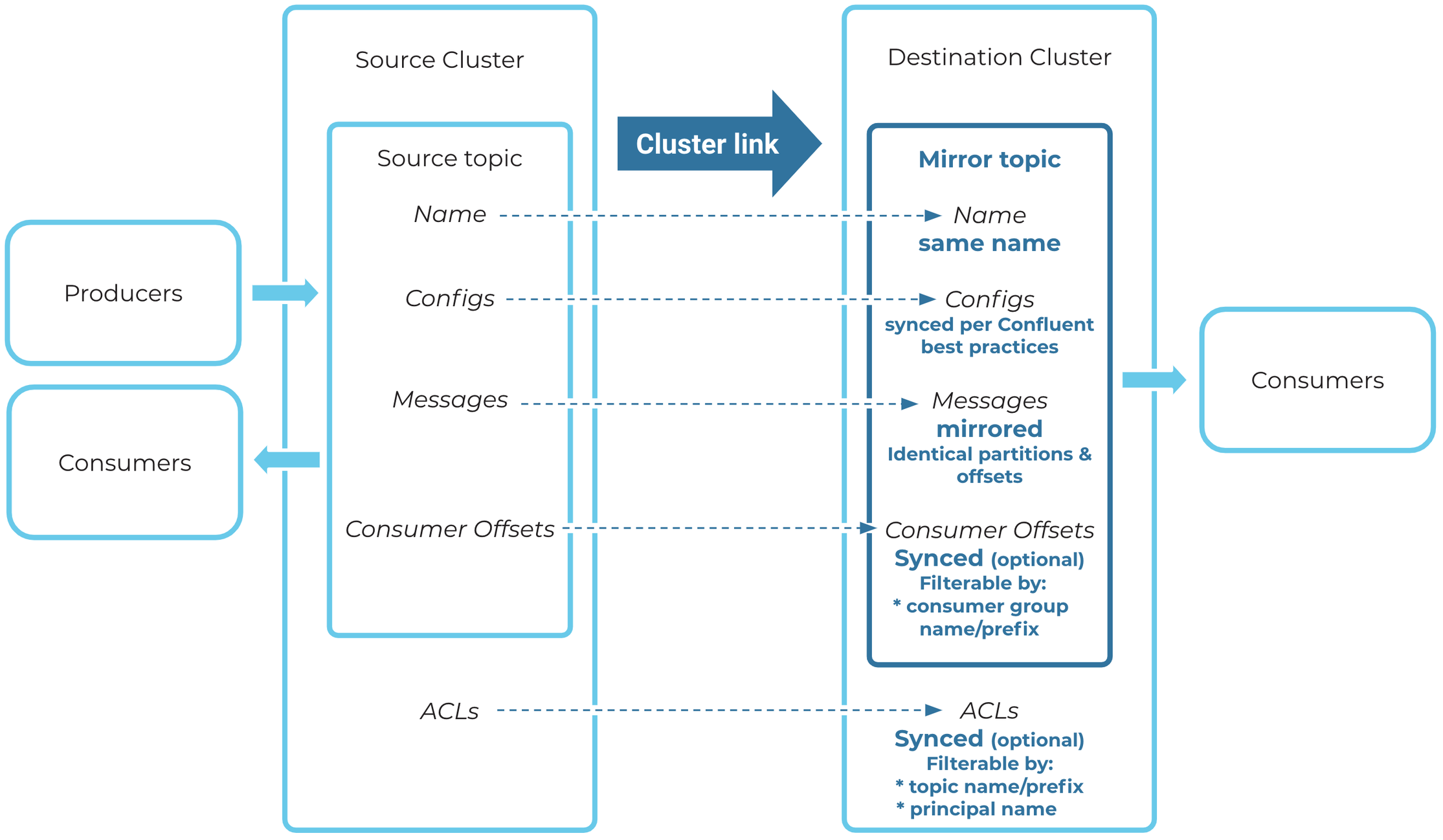

Mirror topics are a special kind of topic: they are read-only copies of their source topic. Any messages produced to the source topic are mirrored to the mirror topic “byte-for-byte,” meaning that the same messages go to the same partition and same offset on the mirror topic. Consumers can read from mirror topics just as they read from any other topic.

Cluster links and mirror topics are the building blocks you can use to create scalable, consistent architectures across regions, clouds, teams, and organizations.

Cluster Linking replicates essential metadata.

- Cluster Linking applies the best practice of syncing topic configurations between the source and mirror topics. (Certain configurations are synced, others are not.)

- You can enable consumer offset sync, which will sync consumer offsets from the source topic to the mirror topic (only for mirror topics), and you can filter to specific consumer groups if desired.

- You can enable ACL sync, which will sync all ACLs on the cluster (not just for mirror topics). You can filter based on the topic name or the principal name, as needed.

These features are covered in the various Tutorials.
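These sync features are configured as properties on the cluster link. The following is a minimal sketch, not a reference configuration: it writes a file named sync.config (a hypothetical name) that enables consumer offset sync only for consumer groups starting with a made-up orders- prefix, and enables ACL sync only for topics with that same prefix. The property names and filter JSON are assumptions to check against the Cluster Linking configuration reference for your version.

```shell
#!/usr/bin/env bash
# Hypothetical sync settings for a cluster link.
# - Offset sync: only consumer groups whose names start with "orders-" (made-up prefix).
# - ACL sync: only ACLs on topics whose names start with "orders-".
# The quoted 'EOF' keeps the shell from touching the JSON.
cat > sync.config <<'EOF'
consumer.offset.sync.enable=true
consumer.offset.group.filters={"groupFilters": [{"name": "orders-", "patternType": "PREFIXED", "filterType": "INCLUDE"}]}
acl.sync.enable=true
acl.filters={"aclFilters": [{"resourceFilter": {"resourceType": "topic", "name": "orders-", "patternType": "prefixed"}, "accessFilter": {"operation": "any", "permissionType": "any"}}]}
EOF
```

You would pass a file like this to confluent kafka link create with the --config flag. On Confluent Cloud, ACL sync only works between clusters in the same organization, so leave acl.sync.enable out for cross-organization links.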

Tip

Cluster Linking can use egress static IP addresses on AWS destination clusters.

Use cases¶

Confluent provides multi-cloud, multi-region, and hybrid capabilities in Confluent Cloud. Many of these are demonstrated in the Tutorials, as well as in tutorials specific to each use case.

- Global and Multi-Cloud Replication: Move and aggregate real-time data across regions and clouds. By making geo-local reads of real-time data possible, Cluster Linking can act like a content delivery network (CDN) for your Kafka events throughout the public cloud, private cloud, and at the edge.

- Data Sharing: Share data in real time with other teams, lines of business, or organizations.

- Data Migration: Migrate data and workloads from one cluster to another.

- Disaster Recovery and High Availability: Create a disaster recovery cluster, and fail over to it during an outage.

- Tiered Separation of Critical Applications: Protect Tier 1, customer-facing applications and workloads from disruption by creating a read-replica cluster for lower-priority applications and workloads.

Cluster Linking mirroring throughput (the bandwidth used to read data from or write data to your cluster) counts against the Fixed limits and recommended guidelines for your cluster.

Supported cluster types¶

A cluster link sends data from a “source cluster” to a “destination cluster”. The supported cluster types are shown in the table below.

Unsupported cluster types and other limits are described in Limitations.

| Source Cluster Options | Destination Cluster Options |
|---|---|
| Any Dedicated or Enterprise Confluent Cloud cluster | |
| Dedicated Confluent Cloud cluster or Enterprise cluster with private networking | Dedicated or Enterprise Confluent Cloud cluster under certain networking circumstances, see Manage Private Networking for Cluster Linking on Confluent Cloud. |
| Apache Kafka® 3.0+ or Confluent Platform 7.0+ with public internet IP addresses on all brokers [1] | Any Dedicated or Enterprise Confluent Cloud cluster |
| Kafka 3.0+ without public endpoints [1] | A Dedicated Confluent Cloud cluster with VPC Peering or VNet Peering, or Enterprise cluster |
| Confluent Platform 7.1+ (even behind a firewall) | Any Dedicated or Enterprise Confluent Cloud cluster under certain networking circumstances, see Manage Private Networking for Cluster Linking on Confluent Cloud. |
| Confluent Platform 7.0+ (even behind a firewall) | |

- If the source cluster is not a Confluent Cloud cluster, then it must be running version 2.4 of the inter.broker.protocol (IBP).

- For cluster links using public networking, the source cluster and destination cluster can be in different regions, cloud providers, organizations, or Confluent Cloud environments.

- Cluster links using private networking are now supported across Azure and AWS cloud providers, as described in Supported cluster combinations for private networking. Clusters must be in the same Confluent Cloud organization and are not supported with Google Cloud private networking.

- Dedicated legacy clusters are not supported as a destination with Cluster Linking. You should migrate to Dedicated clusters to use Cluster Linking.

Footnotes

[1] Cluster Linking is supported on all currently supported versions of Confluent Platform and Kafka, as described in Confluent Platform and Apache Kafka compatibility.

The exception to this is for migration use cases from Confluent Platform or Kafka to Confluent Cloud, where the source cluster can be Confluent Platform 5.4.0 or later or Kafka 2.4.0 or later.

For migration use cases with source versions earlier than Confluent Platform 5.5.0 or Kafka 2.5.0, you must disable the “incremental fetch” request by setting the broker configuration max.incremental.fetch.session.cache.slots=0 and then restarting all the source brokers.
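As a sketch of that broker change, assuming each source broker reads its settings from a local server.properties file, the step below sets the key idempotently. The PROPS path is hypothetical, and the broker restart is left out because it depends on how your brokers are deployed.

```shell
#!/usr/bin/env bash
# Disable incremental fetch sessions on an older source broker
# (Confluent Platform < 5.5.0 or Kafka < 2.5.0) before linking it for migration.
# PROPS is a hypothetical path; point it at each source broker's server.properties.
PROPS=${PROPS:-./server.properties}
KEY=max.incremental.fetch.session.cache.slots

touch "$PROPS"
# Remove any existing line for the key (grep exits 1 if nothing is left, hence || true),
# then append the required value so the key appears exactly once.
grep -v "^${KEY}=" "$PROPS" > "$PROPS.tmp" || true
echo "${KEY}=0" >> "$PROPS.tmp"
mv "$PROPS.tmp" "$PROPS"

# The setting only takes effect after every source broker is restarted.
```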

How to check the cluster type¶

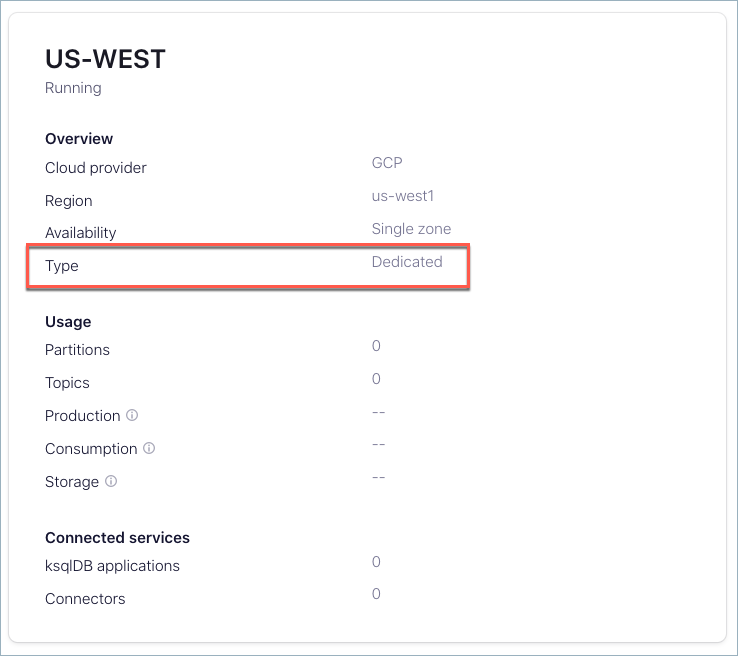

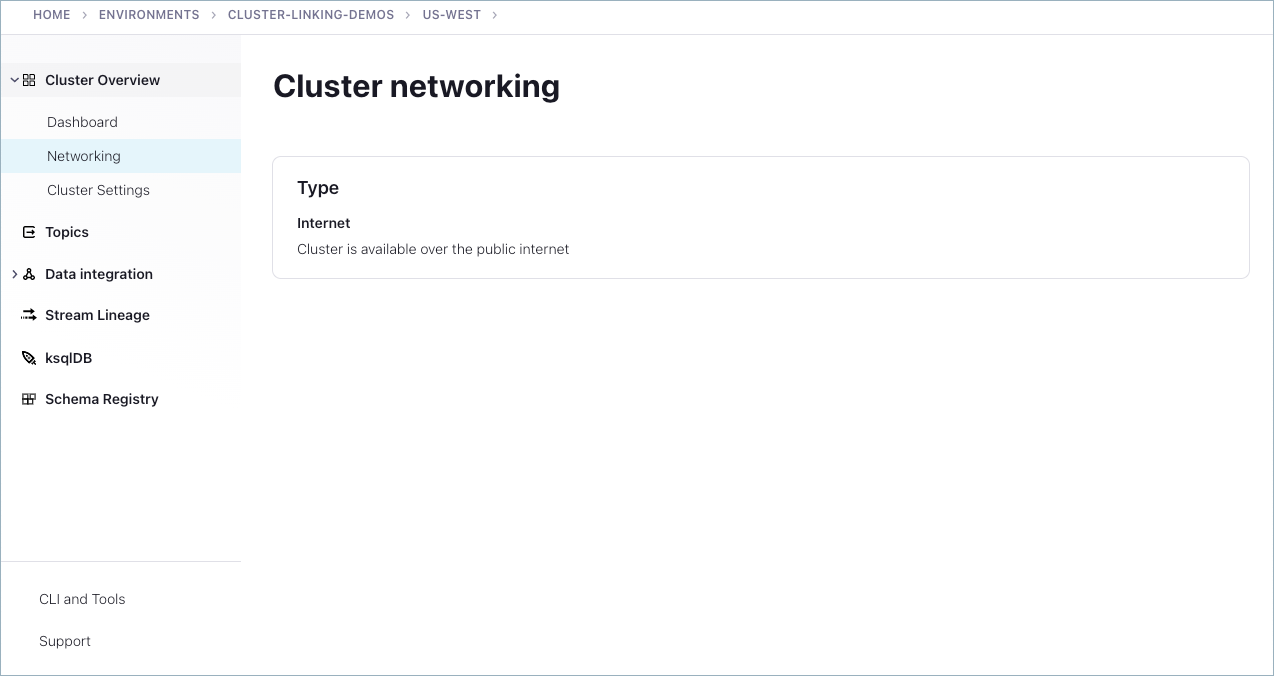

To check a Confluent Cloud cluster’s type and endpoint type:

Log on to Confluent Cloud.

Select an environment.

Select a cluster.

The cluster type is shown on the summary card for the cluster.

Alternatively, click into the cluster, and select Cluster settings from the left menu. The cluster type is shown on the summary card for “Cluster type”.

From the left menu under Cluster overview for a dedicated cluster, click the Networking menu item to view the endpoint type. Only Dedicated clusters have the Networking tab; Basic and Standard clusters always have Internet networking. Networking is defined when you first create the Dedicated cluster.

Support for Cluster Linking from external sources¶

As described in Supported cluster types for Cluster Linking, you can use any Kafka 3.0+ cluster as a source cluster. For example, you can link from an external source such as an Amazon MSK cluster to a Confluent Cloud cluster.

Linking from external sources is detailed in the following scenarios:

Pricing¶

Confluent Cloud clusters that use Cluster Linking are charged based on the number of cluster links and the volume of mirroring throughput to or from the cluster.

For a detailed breakdown of how Cluster Linking is billed, including guidelines for using metrics to track your costs, see Cluster Linking in Confluent Cloud Billing.

For general information about Confluent Cloud prices, see the Confluent Cloud pricing page on the Confluent website.

Getting Started¶

Just getting started with Cluster Linking? Here are a few suggestions for next steps.

Tutorials¶

To get started, try one or more tutorials, each of which maps to a use case.

Mirror topics¶

Read-only mirror topics that reflect the data in original (source) topics are the building blocks of Cluster Linking. For a deep dive on this specialized type of topic and how it works, see Mirror Topics.

Commands and prerequisites¶

Use the confluent kafka link command on the destination cluster to create a link from the source cluster.

The following prerequisite steps are needed to run the tutorials during the Preview.

To try out Cluster Linking on Confluent Cloud:

Install the Confluent CLI if you do not already have it, as described in Install Confluent CLI.

- To learn more about Confluent Cloud in general, see Quick Start for Confluent Cloud.

Log on to Confluent Cloud.

Update your Confluent CLI to be sure you have an up-to-date version of the Cluster Linking commands. See Get the latest version of the Confluent CLI in the quick start for details.

The confluent kafka link command has the following subcommands.

| Command | Description |
|---|---|
| create | Create a new cluster link. |
| delete | Delete a previously created cluster link. |
| describe | Describe an existing cluster link. |
| list | List existing cluster links. |
| update | Update a property for an existing cluster link. |

The confluent kafka mirror command has the following subcommands.

| Command | Description |
|---|---|
| describe | Describe a mirror topic. |
| failover | Fail over the mirror topics. |
| list | List all mirror topics in the cluster or under the given cluster link. |
| pause | Pause the mirror topics. |
| promote | Promote the mirror topics. |
| resume | Resume the mirror topics. |

Follow the tutorials to try out Cluster Linking; they demonstrate these commands.

CLI tips¶

A list of Confluent CLI commands is available here. Following are some generic strategies for saving time on command line workflows.

Save command output to a text file¶

To keep track of information, save the output of the Confluent Cloud commands to a text file. If you do so, be sure to safeguard API keys and secrets afterwards by deleting the file or moving the credentials to safer storage. To redirect command output to a file, you can use either of these methods and manually add headings for organization:

- To redirect output to a file, use Linux syntax such as <command> > notes.txt to run the first command and create the notes file, and then <command> >> notes.txt to append further output.

- To send output to a file and also view it on-screen (recommended), use <command> | tee notes.txt to run the first command and create the file. Thereafter, use the tee command with the -a flag to append; for example, <command> | tee -a notes.txt.
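A minimal sketch of both methods, using echo as a stand-in for a real Confluent CLI command so that it runs anywhere:

```shell
#!/usr/bin/env bash
# echo stands in for a Confluent CLI command whose output you want to keep.
NOTES=notes.txt

# Method 1: plain redirection (nothing is shown on screen).
echo "## Cluster link" > "$NOTES"          # create (or overwrite) the file
echo "link: east-west-link" >> "$NOTES"    # append further output

# Method 2: tee shows the output on screen and appends it to the same file.
echo "## Mirror topics" | tee -a "$NOTES"
echo "mirror: clickstream.tokyo" | tee -a "$NOTES"
```

Because a file like this can end up holding API keys and secrets, clean it up once you are done.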

Use configuration files to store data you will use in commands¶

Create configuration files to store API keys and secrets, detailed configurations on cluster links, or security credentials for clusters external to Confluent Cloud. Examples of this are provided in (Usually optional) Use a config File in the topic data sharing tutorial and in Create the cluster link for the disaster recovery tutorial.

Use environment variables to store resource information¶

You can streamline your command line workflows by saving credentials and cluster data in shell environment variables. Save API keys and secrets, resources such as IDs for environments, clusters, or service accounts, and bootstrap servers, then use the variables in Confluent commands.

For example, create variables for an environment and clusters:

export CLINK_ENV=env-abc123

export USA_EAST=lkc-qxxw7

export USA_WEST=lkc-1xx66

Then use these in commands:

$ confluent environment use $CLINK_ENV

Now using "env-abc123" as the default (active) environment.

$ confluent kafka cluster use $USA_EAST

Set Kafka cluster "lkc-qxxw7" as the active cluster for environment "env-abc123".

Put it all together in commands¶

Assuming you’ve created environment variables for your clusters, API keys, and secrets, and have cluster link configuration details in a file called link.config, here is an example of creating a cluster link named “east-west-link” using variables and your configuration file.

confluent kafka link create east-west-link \
--cluster $DESTINATION_ID \
--source-cluster $ORIG_ID \
--source-bootstrap-server $ORIG_BOOT \
--config link.config
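What goes into link.config depends on your source cluster. As a minimal sketch, assuming the source is a Confluent Cloud cluster reached over SASL/PLAIN with an API key, the file could be written like this. SOURCE_API_KEY and SOURCE_API_SECRET are hypothetical variable names; other source types (for example, OAuth or an external cluster) need different security settings.

```shell
#!/usr/bin/env bash
# Write link.config for a source cluster that uses SASL/PLAIN over TLS with an
# API key (an assumption; adjust for your source's security setup).
# SOURCE_API_KEY / SOURCE_API_SECRET are hypothetical; visible placeholders
# are used when they are unset.
: "${SOURCE_API_KEY:=<source-api-key>}"
: "${SOURCE_API_SECRET:=<source-api-secret>}"

(
  umask 077  # the file holds a secret, so make it readable by the owner only
  cat > link.config <<EOF
security.protocol=SASL_SSL
sasl.mechanism=PLAIN
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="${SOURCE_API_KEY}" password="${SOURCE_API_SECRET}";
EOF
)
```

If you would rather not keep the secret on disk, pass --source-api-key and --source-api-secret on the command line instead, as in the tokyo-sydney example.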

Scaling Cluster Linking¶

Because Cluster Linking fetches data from source topics, the first scaling unit to inspect is the number of partitions in the source topics. Having enough partitions lets Cluster Linking mirror data in parallel. Having too few partitions can make Cluster Linking bottleneck on partitions that are more heavily used.

In Confluent Cloud, Cluster Linking scales with the ingress and egress quotas of your cluster. Cluster Linking is able to use all remaining bandwidth in a cluster’s throughput quota: 150 MB/s per CKU egress on a Confluent Cloud source cluster or 50 MB/s per CKU ingress on a Confluent Cloud destination cluster, whichever is hit first. Therefore, to scale Cluster Linking throughput, simply adjust the number of CKUs on either the source, the destination, or both.
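To make the "whichever is hit first" rule concrete, here is a rough sketch that estimates the bandwidth left for a link from the per-CKU figures above. The client-traffic inputs are hypothetical numbers you would read from your own metrics, and the result is only an upper bound: partition counts, physical distance, and the lower priority of link writes on the destination can all keep real throughput below it.

```shell
#!/usr/bin/env bash
# Rough ceiling for Cluster Linking throughput on Confluent Cloud, using
# 150 MB/s egress per source CKU and 50 MB/s ingress per destination CKU.
# Client traffic already using those quotas is subtracted, because the link
# only gets the remaining bandwidth.
link_headroom_mbps() {
  local src_cku=$1 dst_cku=$2
  local src_client_egress=${3:-0}   # MB/s consumers already read from the source (assumed input)
  local dst_client_ingress=${4:-0}  # MB/s producers already write to the destination (assumed input)

  local src_left=$(( src_cku * 150 - src_client_egress ))
  local dst_left=$(( dst_cku * 50 - dst_client_ingress ))

  # Whichever side runs out first caps the link; never report a negative rate.
  local cap=$src_left
  if [ "$dst_left" -lt "$cap" ]; then cap=$dst_left; fi
  if [ "$cap" -lt 0 ]; then cap=0; fi
  echo "$cap"
}

link_headroom_mbps 2 2        # idle clusters with 2 CKUs each
link_headroom_mbps 2 4 0 120  # destination producers already write 120 MB/s
```

The two example calls print 100 and then 80: in both cases the destination's ingress quota, not the source's egress quota, is the binding limit, which is why adding destination CKUs is often the first lever.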

Note

On the destination cluster, Cluster Linking writes take lower priority than Kafka clients producing to that cluster; Cluster Linking is throttled first.

Confluent proactively monitors all cluster links in Confluent Cloud and will perform tuning when necessary. If you find that your cluster link is not hitting these limits even after a full day of sustained traffic, contact Confluent Support.

To learn more, see recommended guidelines for Confluent Cloud.

In a Confluent Platform or Apache Kafka® cluster, you can scale Cluster Linking throughput as follows:

- On the cluster link configurations, change the number of fetcher threads or change the fetch size to get better batching. (See Configuration options on the cluster link for Confluent Platform.)

- Improve the cluster’s maximum throughput by scaling the brokers vertically or horizontally.

- Use the options listed under Cluster Link Replication Configurations to tune cluster link performance, which helps scale cluster link throughput.

Limitations¶

This section details support and known limitations in terms of cluster types, cluster management, and performance.

Cluster types and networking¶

- Currently supported cluster types are described in Supported cluster types.

- A given cluster can only be the destination for 10 cluster links. By default, Cluster Linking does not support aggregating data from more than 10 sources. The limit can be raised significantly for these aggregation use cases. To inquire about raising the cluster link limit for a single destination cluster, contact Confluent Support.

Kafka transactions such as “exactly once” semantics not supported on mirror topics¶

Cluster Linking is not integrated with Kafka transactions, including “exactly once” semantics. Using Cluster Linking to mirror topics that contain transactions or exactly once semantics is not supported and not recommended.

Security¶

- Cluster links on Confluent Cloud that use OAuth must be created using either the Confluent CLI or REST API. Creating cluster links that use OAuth is not currently supported in Terraform or in the Confluent Cloud Console.

- To learn more, see information about the Security model for Cluster Linking.

ACL syncing¶

A key feature of Cluster Linking is the capability to sync ACLs between clusters. This is useful when moving clients between clusters for a migration or failover.

- In Confluent Cloud, ACL sync is only supported between two Confluent Cloud clusters that belong to the same Confluent Cloud organization. ACL sync is not supported between two Confluent Cloud clusters in different organizations, or between a Confluent Platform and a Confluent Cloud cluster. This is because the principals used in Confluent Cloud are service accounts unique to one Confluent Cloud organization.

- Do not include ACLs that are managed independently on the destination cluster in the sync filter. Excluding them prevents the cluster link from deleting ACLs that were added specifically on the destination and should not be deleted. To learn more, see Configuring cluster link behavior and Syncing ACLs from source to destination cluster.

Terraform¶

If using Terraform to create and manage a cluster link, Terraform can only create and manage cluster links that are between two Confluent Cloud clusters. It cannot create or manage cluster links to or from an external cluster, such as an open-source Apache Kafka® cluster or a Confluent Platform cluster.

Management limitations¶

- Cluster links must be created and managed on the destination cluster.

- Cluster links can only be created with destination clusters that are Dedicated or Enterprise Confluent Cloud clusters.

- In Confluent Platform 7.1.0 and later, REST API calls to list and get source-initiated cluster links will have their destination cluster IDs returned under the parameter source_cluster_id.

- Mirror topics count against a cluster’s topic limits, partition limits, and/or storage limits, just like other topics.

- A cluster link has no limit on the number of topics or partitions it can mirror, other than the destination cluster’s maximum number of topics and partitions.

- A cluster can have at most 10 cluster links targeting it as the destination; that is, not more than 10 cluster links that are replicating data to it. Some aggregation use cases may require more than 10 cluster links on one destination cluster. The limit can be raised significantly for these aggregation use cases. To inquire about raising the cluster link limit to a single cluster for an aggregation use case, contact Confluent Support.

- By definition, a mirror topic can only have one cluster link and one source topic replicating data to it. Conversely, a single topic can be the source topic for an unlimited number of mirror topics.

- When deleting a cluster link, first check that all mirror topics are in the STOPPED state. If any are in the PENDING_STOPPED state, deleting a cluster link can cause irrecoverable errors on those mirror topics due to a temporary limitation.

- The frequency of sync processes for consumer group offset sync, ACL sync, and topic configuration sync is user-configurable. These syncs can run at most once per second (that is, every 1000 ms, since the setting is in milliseconds). You can set them to run less frequently, but not more frequently than every 1000 ms.
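The 1000 ms floor is easy to violate by accident when link settings are scripted. The check below is a hypothetical pre-flight helper (check_sync_ms is not part of any Confluent tooling) that rejects intervals more frequent than once per second. The property names consumer.offset.sync.ms, acl.sync.ms, and topic.config.sync.ms are assumed here to be the corresponding interval settings; confirm them in the cluster link configuration reference.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: reject sync intervals below the 1000 ms floor
# before they are written into a cluster link config.
MIN_SYNC_MS=1000

check_sync_ms() {
  local key=$1 value=$2
  # Only whole numbers of milliseconds are accepted.
  case $value in
    ''|*[!0-9]*) echo "$key: '$value' is not a whole number of milliseconds" >&2; return 1 ;;
  esac
  if [ "$value" -lt "$MIN_SYNC_MS" ]; then
    echo "$key=$value would sync more often than once every ${MIN_SYNC_MS} ms" >&2
    return 1
  fi
  echo "$key=$value"   # valid: emit it as a config line
}

check_sync_ms consumer.offset.sync.ms 30000
check_sync_ms acl.sync.ms 500 || echo "acl.sync.ms rejected"
```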

Feature support and permissions requirements for Cloud Console¶

- You must be a CloudClusterAdmin, EnvironmentAdmin, or OrganizationAdmin in order to create a cluster link and/or mirror topics in the Confluent Cloud Console. Using the UI without these roles will yield a permissions error when attempting to create a cluster link. To learn more, see Manage Security for Cluster Linking on Confluent Cloud.

- You must be an OrganizationAdmin to use the first source-cluster option: “Confluent Cloud (in my org)” because you must be authorized to create a Service Account. Using that option without the OrganizationAdmin role will yield a permissions error when attempting to create a cluster link. To learn more, see Manage Security for Cluster Linking on Confluent Cloud.

Performance limits¶

- Throughput

  For Cluster Linking, throughput indicates bytes-per-second of data replication. The following performance factors and limitations apply.

  - Cluster Linking throughput counts towards the destination cluster’s produce limits (also known as “ingress” or “write” limits). However, production from Kafka clients is prioritized over Cluster Linking writes, so the two are exposed as separate metrics in the Metrics API: Kafka client writes are received_bytes and Cluster Linking writes are cluster_link_destination_response_bytes.

  - Cluster Linking consumes from the source cluster much like a Kafka consumer, and its throughput is treated the same as consumer throughput. Cluster Linking contributes to any quotas and hard or soft limits on your source cluster. Kafka client reads and Cluster Linking reads are therefore included in the same metric in the Metrics API: sent_bytes.

  - Cluster Linking is able to max out the throughput of your CKUs. The physical distance between clusters is a factor in Cluster Linking performance. Confluent monitors cluster links and optimizes their performance. Unlike Replicator and Kafka MirrorMaker 2, Cluster Linking has no separate unit of scaling (such as tasks), so you do not need to scale your cluster links up or down to increase performance.

- Connections

  - Cluster Linking connections count towards any connection limits on your clusters.

- Request rate

  - Cluster Linking contributes requests that count towards your source cluster’s request rate limits.
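To see how the destination metrics separate client writes from link writes, the following sketch queries the Confluent Cloud Metrics API. The endpoint and request body are assumed to follow the Metrics API v2 query format, and the metric names are the ones listed above with the io.confluent.kafka.server/ prefix; CLOUD_API_KEY, CLOUD_API_SECRET, the lkc-abc123 cluster ID, and the interval are placeholders, so verify the details against the Metrics API reference before use.

```shell
#!/usr/bin/env bash
# Sketch: query one destination-cluster metric through the Metrics API v2.
# CLOUD_API_KEY / CLOUD_API_SECRET are hypothetical names for a Cloud API key.
METRICS_URL=https://api.telemetry.confluent.cloud/v2/metrics/cloud/query

# Build the JSON body. $1 = metric name, $2 = cluster ID, $3 = ISO-8601 interval.
metrics_query_body() {
  cat <<EOF
{
  "aggregations": [{"metric": "io.confluent.kafka.server/$1"}],
  "filter": {"field": "resource.kafka.id", "op": "EQ", "value": "$2"},
  "granularity": "PT1H",
  "intervals": ["$3"]
}
EOF
}

# Send the body on stdin to the query endpoint with basic auth.
query_metric() {
  metrics_query_body "$@" |
    curl -s -u "$CLOUD_API_KEY:$CLOUD_API_SECRET" \
      -H 'Content-Type: application/json' \
      --data @- "$METRICS_URL"
}

# Example calls (not run here): client writes vs. Cluster Linking writes.
# query_metric received_bytes lkc-abc123 2024-01-01T00:00:00Z/2024-01-01T06:00:00Z
# query_metric cluster_link_destination_response_bytes lkc-abc123 2024-01-01T00:00:00Z/2024-01-01T06:00:00Z
```

On the source cluster, the same query with sent_bytes returns client reads and link reads combined, since the two are not reported separately there.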

Tip

See more Q&A at Frequently Asked Questions for Cluster Linking on Confluent Cloud.

Known issues¶

Considerations for deleting source topics¶

Do not delete a source topic for an active mirror topic, as it can cause issues with Cluster Linking. Instead, follow these steps as a best practice:

- Use the promote or failover commands to stop or delete any active mirror topics that read from the source topic you want to delete.

- Then, you can safely delete the source topic.

To learn more, see Source Topic Deletion in Mirror Topics.
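Both this step and the earlier rule about deleting a cluster link come down to confirming that every relevant mirror topic has reached STOPPED. The guard below is a hypothetical helper: it reads a two-column "topic state" listing whose format is an assumption (build it from whatever tooling you use to inspect mirror topic states) and succeeds only if every topic is STOPPED.

```shell
#!/usr/bin/env bash
# Hypothetical guard: succeed only if every mirror topic in a "<topic> <state>"
# listing on stdin is STOPPED. Any other state, including PENDING_STOPPED,
# is reported on stderr and makes the function fail.
all_mirrors_stopped() {
  awk '
    NF == 0 { next }   # ignore blank lines
    $2 != "STOPPED" { print "not stopped: " $1 " (" $2 ")" > "/dev/stderr"; bad = 1 }
    END { exit bad }
  '
}

printf '%s\n' "clickstream.tokyo STOPPED" "orders.tokyo PENDING_STOPPED" |
  all_mirrors_stopped || echo "wait before deleting the source topic or the cluster link"
```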

On source-initiated links, a Confluent Platform source cluster that links to a KRaft destination must be 7.1.0 or later¶

Cluster Linking with a source-initiated link between a source cluster running Confluent Platform 7.0.x or earlier (non-KRaft) and a destination cluster running in KRaft mode is not supported. Link creation may succeed, but the connection will ultimately fail (with a SOURCE_UNAVAILABLE error message). To work around this issue, make sure the source cluster is running Confluent Platform version 7.1.0 or later. If you have links from a Confluent Platform source cluster to a Confluent Cloud destination cluster, you must upgrade your source clusters to Confluent Platform 7.1.0 or later to avoid this issue.

This limitation only applies to source-initiated links. A regular cluster link can be created between a KRaft destination cluster and a non-KRaft source cluster, as long as the destination cluster can connect to the source cluster.
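Before creating a source-initiated link, a quick version check can catch this case early. The sketch below assumes you already know the source's Confluent Platform version (for example, from your own inventory) and relies on GNU sort -V for version ordering; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Pre-flight check for a source-initiated link to a KRaft destination:
# the Confluent Platform source must be 7.1.0 or later.
# Requires GNU `sort -V` for version ordering.
MIN_CP_FOR_SOURCE_INITIATED=7.1.0

version_ge() {  # true if version $1 >= version $2
  [ "$(printf '%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

check_source_initiated_link() {
  local source_cp_version=$1   # supplied by you (assumed input)
  if version_ge "$source_cp_version" "$MIN_CP_FOR_SOURCE_INITIATED"; then
    echo "ok: Confluent Platform $source_cp_version can source-initiate a link to a KRaft destination"
  else
    echo "upgrade needed: Confluent Platform $source_cp_version is older than $MIN_CP_FOR_SOURCE_INITIATED" >&2
    return 1
  fi
}

check_source_initiated_link 7.1.0
check_source_initiated_link 7.0.5 || true
```

Using sort -V rather than a plain string comparison matters once minor versions reach two digits: a string comparison would rank 7.10.0 below 7.9.0.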