Import and Export a Pipeline for Stream Designer on Confluent Cloud

Important

On February 28, 2025, Stream Designer will be deprecated and removed from Confluent Cloud, and support for Stream Designer will end.

If you are using Stream Designer currently, consider migrating your queries to a Confluent Cloud for Apache Flink® workspace.

Stream Designer enables you to export a pipeline definition to a SQL file and import that file into another pipeline.

Step 1: Create a pipeline project

A Stream Designer pipeline project defines all the components that are deployed for an application. In this step, you create a pipeline project and a canvas for designing the component graph.

Log in to the Confluent Cloud Console and open the Cluster Overview page for the cluster you want to use for creating pipelines.

In the navigation menu, click Stream Designer.

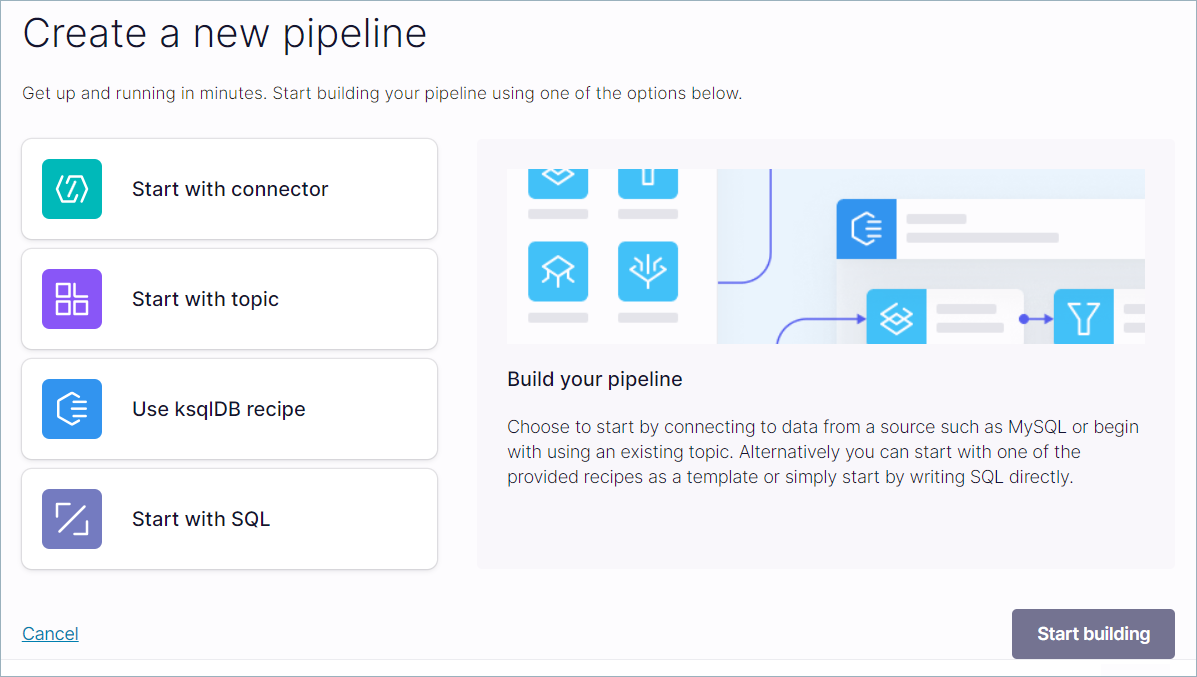

Click Create pipeline.

The Create a new pipeline page opens.

Step 2: Create a table definition

In this step, you start creating your pipeline by using a CREATE TABLE statement. The statement includes the schema definition for a users table that has the following columns:

- ID STRING PRIMARY KEY
- REGISTERTIME BIGINT
- USERID STRING
- REGIONID STRING
- GENDER STRING

Click Start with SQL and click Start building.

The source code editor opens.

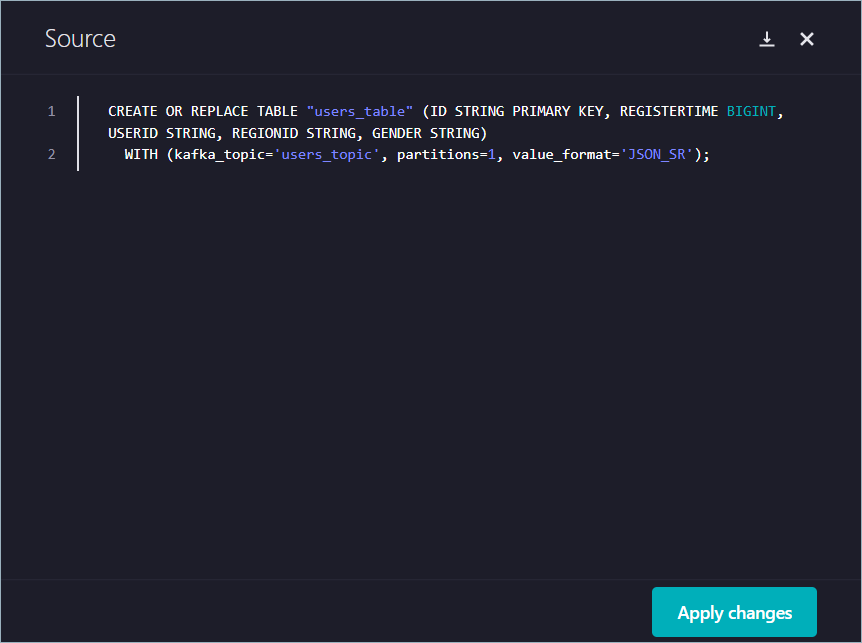

Copy the following code into the source code editor.

```sql
CREATE OR REPLACE TABLE "users_table" (
  ID STRING PRIMARY KEY,
  REGISTERTIME BIGINT,
  USERID STRING,
  REGIONID STRING,
  GENDER STRING
) WITH (
  kafka_topic='users_topic',
  partitions=1,
  value_format='JSON_SR'
);
```

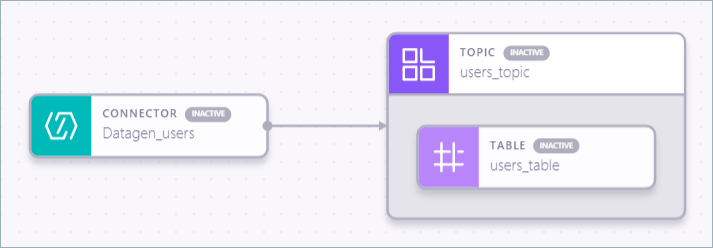

Your output should resemble:

Click Apply changes.

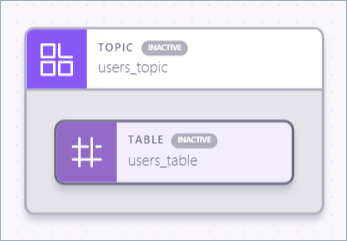

Topic and Table components appear on the canvas.

In the source code editor, click X to dismiss the code view.

Step 2: Create a connector for the topic¶

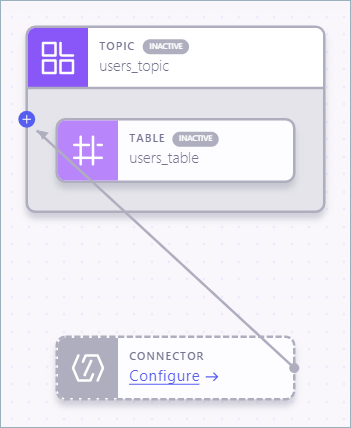

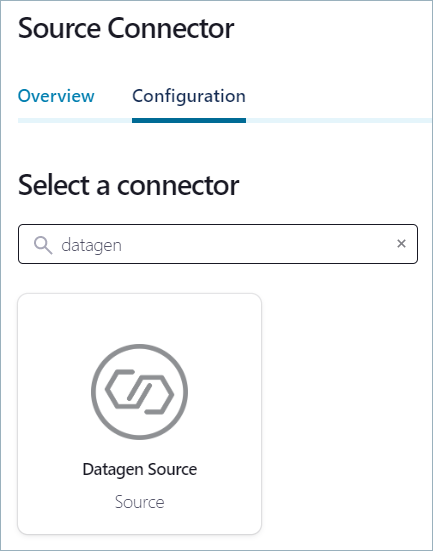

In the Components menu, click Source Connector.

Hover over the Datagen connector component, click + and drag the arrow to the Topic icon.

Step 3: Configure the connector¶

In the Source Connector component, click Configure.

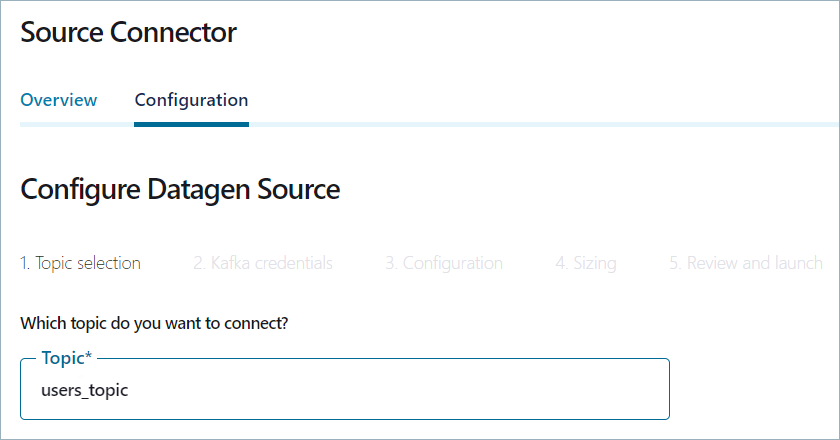

The Source Connector page opens.

In the search box, enter “datagen”.

Click the Datagen Source tile to open the Configuration page.

In the Topic textbox, type “users_topic”.

Click Continue.

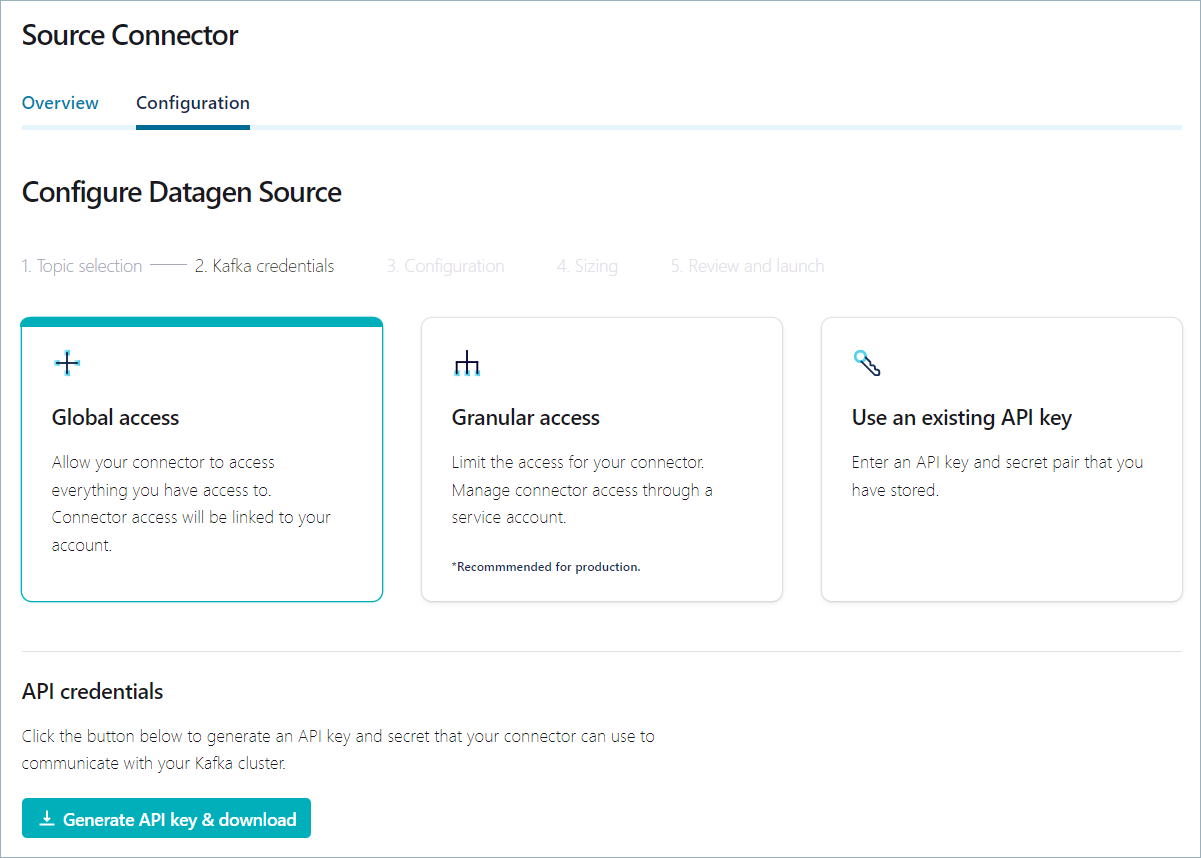

The Kafka credentials page opens.

Ensure that the Global access tile is selected, and click Generate API key & download to create the API key for the Datagen connector.

A text file containing the newly generated API key and secret is downloaded to your local machine.

Click Continue.

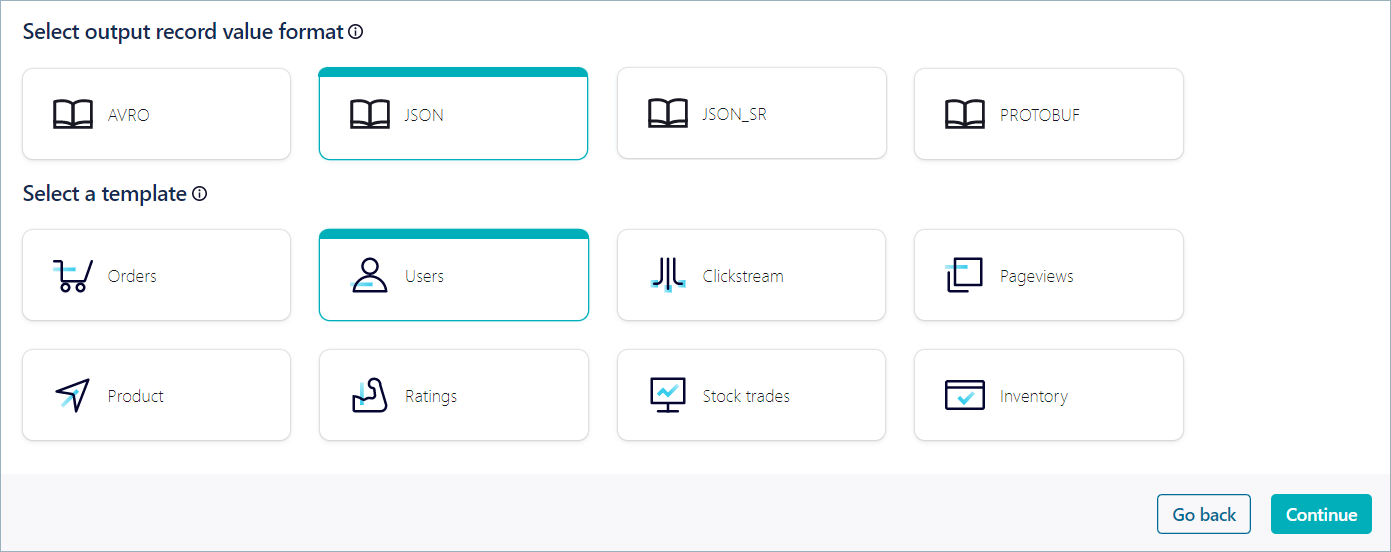

In the Select output record value format section, click JSON_SR, and in the Select a template section, click Users.

Click Continue.

In the Connector sizing section, leave the minimum number of tasks at 1 and click Continue.

In the Connector name textbox, enter “Datagen_users” and click Continue.

The Datagen source connector is configured and appears on the canvas with a corresponding topic component. The topic component is configured with the name you provided during connector configuration.

You have a Datagen Source connector ready to produce mock user data to a Kafka topic named users_topic, with a table registered on the topic.

Step 4: Export the pipeline definition

In this step, you export the SQL code that defines your pipeline to a file on your local machine.

Step 5: Import the pipeline code

In this step, you delete the pipeline components from the canvas and import the pipeline definition that you saved earlier.

Right-click on the Connector component and click Remove.

Right-click on the Topic component and click Remove.

The canvas resets to the Create a new pipeline page.

Click Start with SQL and click Start building.

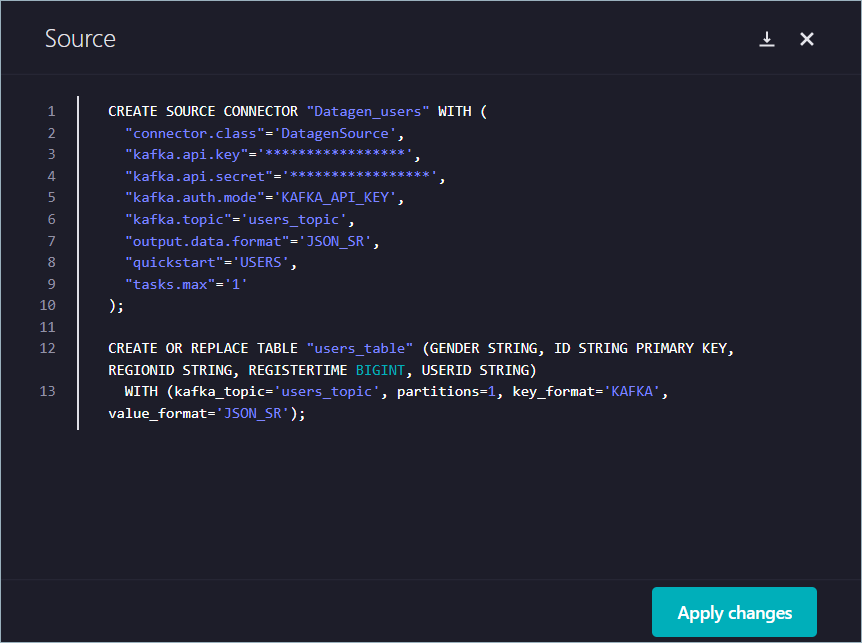

In the source code editor, paste the contents of the SQL file that you downloaded earlier.

From the saved API key file, copy the API key and secret into the corresponding

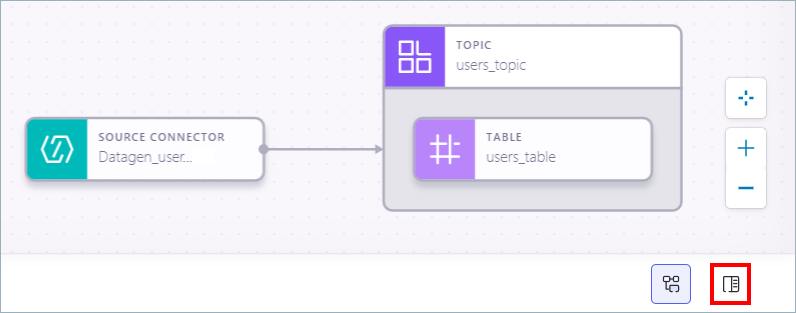

kafka.api.keyandkafka.api.secretfields of the CREATE SOURCE CONNECTOR statement.Your output should resemble:

```sql
CREATE SOURCE CONNECTOR "Datagen_users" WITH (
  "connector.class"='DatagenSource',
  "kafka.api.key"='<your-api-key>',
  "kafka.api.secret"='<your-api-secret>',
  "kafka.auth.mode"='KAFKA_API_KEY',
  "kafka.topic"='users_topic',
  "output.data.format"='JSON_SR',
  "quickstart"='USERS',
  "tasks.max"='1'
);

CREATE OR REPLACE TABLE "users_table" (
  GENDER STRING,
  ID STRING PRIMARY KEY,
  REGIONID STRING,
  REGISTERTIME BIGINT,
  USERID STRING
) WITH (
  kafka_topic='users_topic',
  partitions=1,
  key_format='KAFKA',
  value_format='JSON_SR'
);
```

Click Apply changes.

The Datagen Source connector and Topic components are imported and appear on the canvas. Your pipeline is ready to activate.

Note

When you import code, the canvas resets and all existing components are deleted.

In the source code editor, click X to dismiss it.

The Connector and Topic components appear on the canvas.

Step 6: Activate the pipeline

In this step, you enable security for the pipeline and activate it.

Click Activate to deploy the pipeline components.

The Pipeline activation dialog opens.

In the ksqlDB Cluster dropdown, select the ksqlDB cluster to use for your pipeline logic.

Note

If you don’t have a ksqlDB cluster yet, click Create new ksqlDB cluster to open the ksqlDB Cluster page, and then click Add cluster. When you’ve finished creating the ksqlDB cluster, return to the Pipeline activation dialog and click the refresh button to see your new ksqlDB cluster in the dropdown.

In the Activation privileges section, click Grant privileges.

Click Confirm to activate your pipeline.

After a few seconds, the state of each component goes from Activating to Activated.
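After the components show Activated, the table definition exists on the ksqlDB cluster that you selected. As an optional check that is not part of the Stream Designer workflow itself, you can inspect the table from that cluster's ksqlDB editor in the Cloud Console. The following statement is a sketch. It assumes that the double-quoted name resolves to the lowercase users_table created by the pipeline, the same way it does in the source code editor. The output should list the columns from the table definition.

```sql
-- Optional check: run in the ksqlDB editor of the cluster selected during activation.
-- Assumption: double quotes preserve the lowercase table name created by the pipeline.
DESCRIBE "users_table";
```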

Note

If the filter component reports an error like

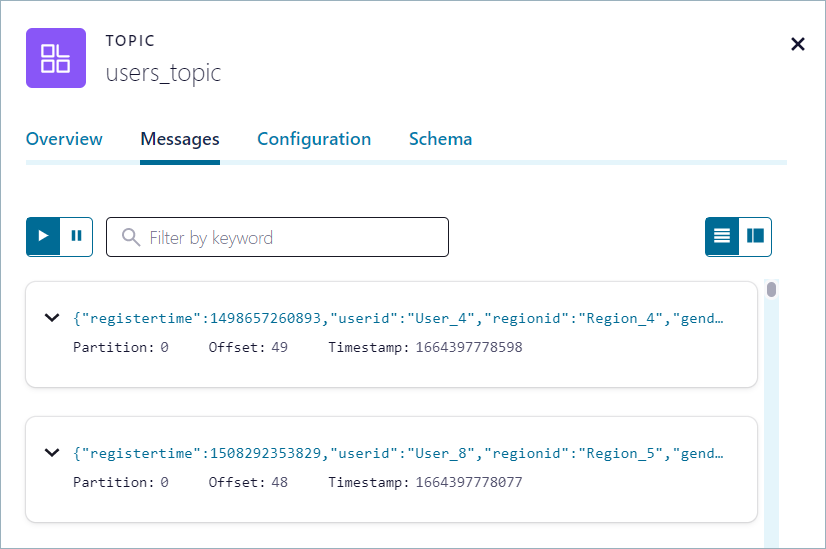

Did not find any value schema for the topic, wait for the Datagen source connector to provision completely and activate the pipeline again.Click the Topic component, and in the details page, click Messages to confirm that the Datagen Source connector is producing messages to the topic.

Your output should resemble the following.
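You can also sample the data through the table instead of the topic's Messages tab. The push query below is a sketch. It assumes that you run it in the ksqlDB editor of the cluster selected during activation, and that the quoted table name resolves as in the pipeline's SQL. The query streams rows as the Datagen connector produces them and terminates after five rows.

```sql
-- Push query: emits rows as new records arrive on users_topic, then stops after 5 rows.
-- To include records produced before the query started, set the query property
-- auto.offset.reset to earliest (in the ksqlDB CLI: SET 'auto.offset.reset'='earliest';).
SELECT * FROM "users_table" EMIT CHANGES LIMIT 5;
```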

Step 7: Deactivate the pipeline

To avoid incurring costs, deactivate the pipeline, which deletes all resources created by the pipeline.

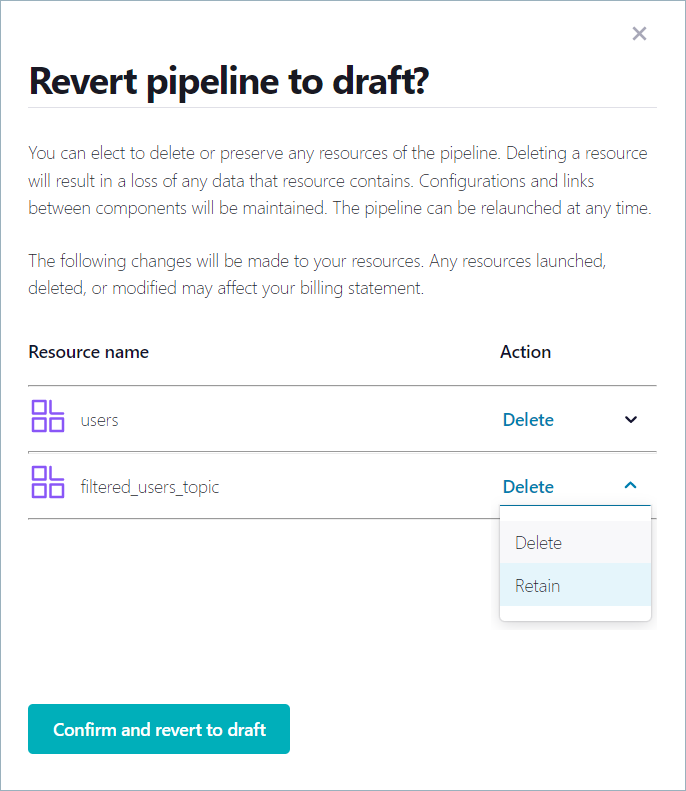

When you deactivate a pipeline, you have the option of retaining or deleting topics in the pipeline.

Click the settings icon.

The Pipeline Settings dialog opens.

Click Deactivate pipeline to delete all resources created by the pipeline.

The Revert pipeline to draft? dialog appears. Click the dropdowns to delete or retain the listed topics. For this example, keep the Delete settings.

Click Confirm and revert to draft to deactivate the pipeline and delete topics.

Step 8: Delete the pipeline

When all components have completed deactivation, you can delete the pipeline safely.

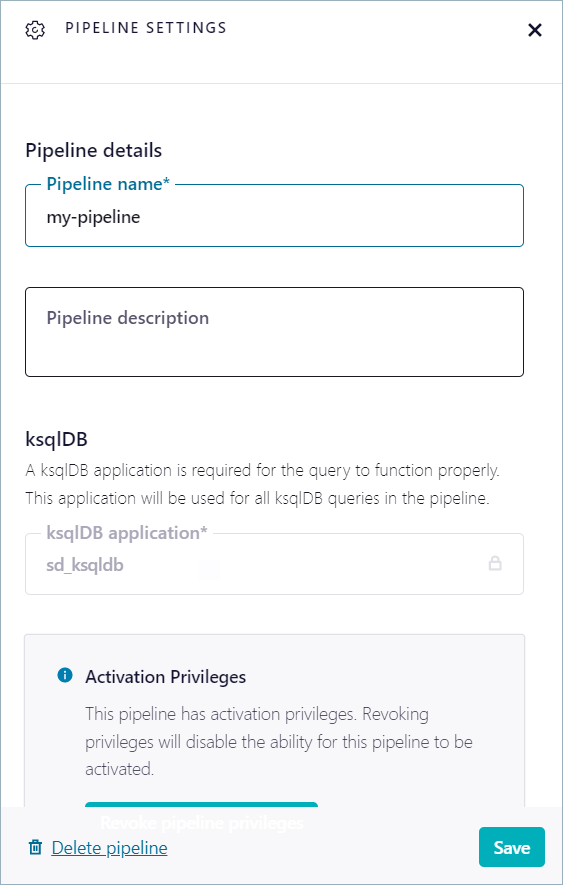

Click the settings icon.

The Pipeline Settings dialog opens.

Click Delete pipeline. In the Delete pipeline dialog, enter “confirm” and click Confirm.

The pipeline and associated resources are deleted. You are returned to the Pipelines list.