Manage Pipeline Secrets for Stream Designer on Confluent Cloud

Important

On February 28, 2025, Stream Designer will be deprecated and removed from Confluent Cloud, and support for Stream Designer will end on the same date.

If you currently use Stream Designer, consider migrating your queries to a Confluent Cloud for Apache Flink® workspace.

Many pipelines require handling secrets, like Kafka cluster API keys and connector credentials. Stream Designer lets you manage these secrets securely by using the Confluent Cloud Console, the Confluent CLI, and the Pipelines REST API.

The following steps show how to create a pipeline that has a Kafka cluster API key and how to manage the secret by using the Confluent Cloud Console, the Confluent CLI, and the Confluent Cloud REST API.

Step 1: Create a pipeline project

A Stream Designer pipeline project defines all the components that are deployed for an application. In this step, you create a pipeline project and a canvas for designing the component graph.

Log in to the Confluent Cloud Console and open the Cluster Overview page for the cluster you want to use for creating pipelines.

In the navigation menu, click Stream Designer.

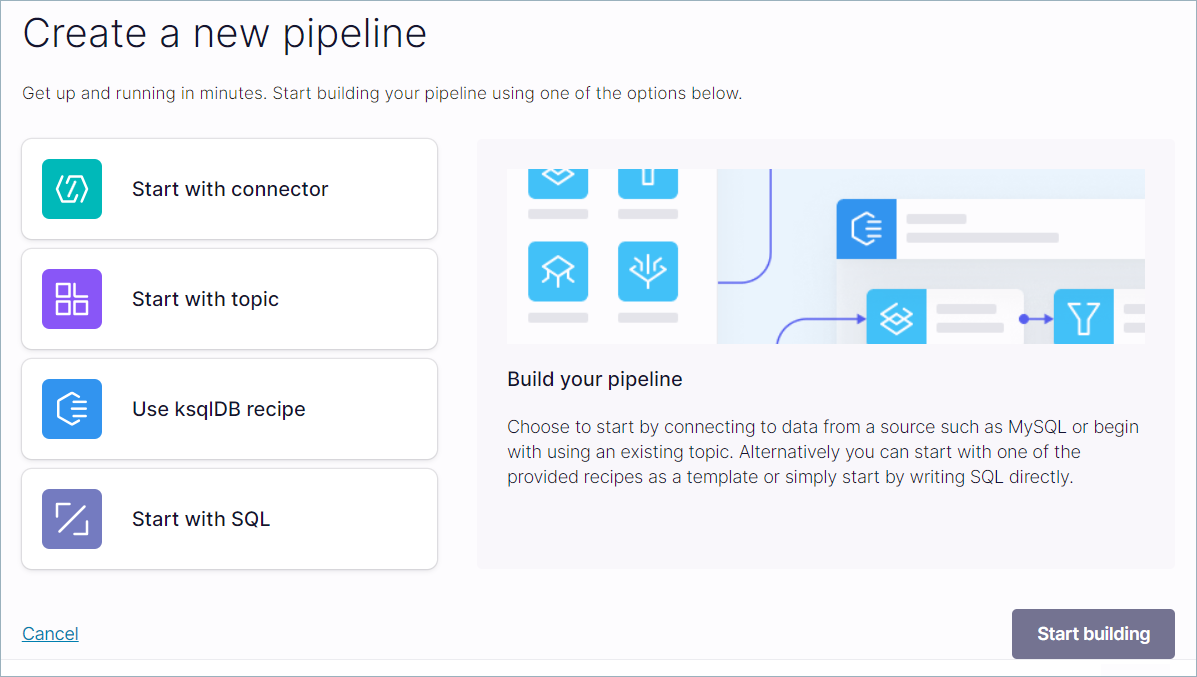

Click Create pipeline.

The Create a new pipeline page opens.

Step 2: Create a connector definition

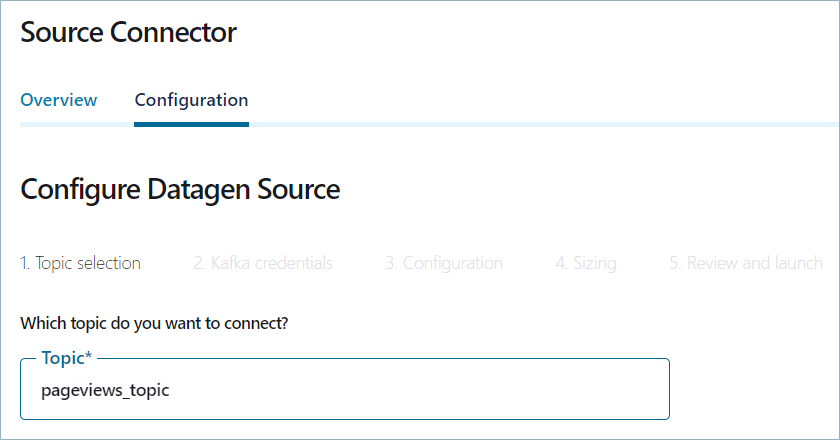

Your pipeline starts with data produced by the Datagen source connector. In this step, you create a pipeline definition for a connector that produces mock pageviews data to a Kafka topic.

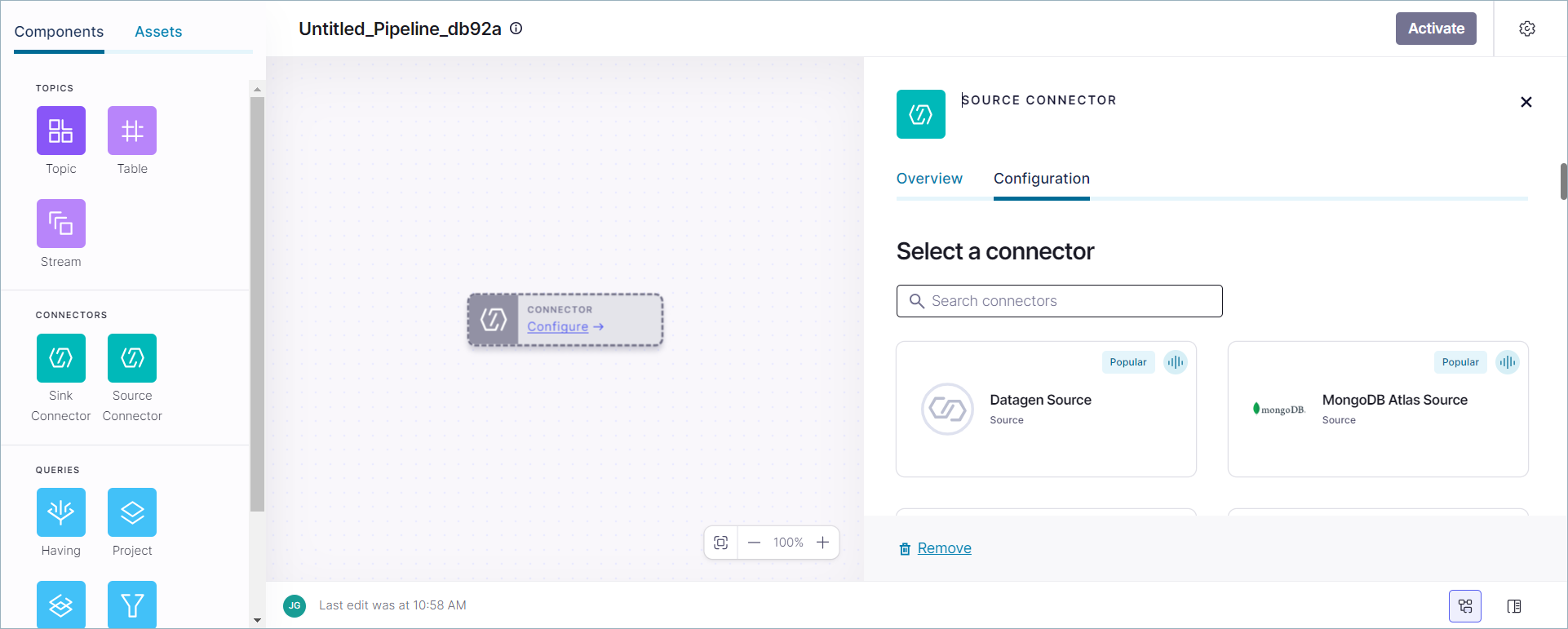


Click Start with Connector and then click Start building.

The Stream Designer canvas opens, with the Source Connector details view visible.

In the Source Connector page, click the Datagen Source tile to open the Configuration page.

In the Topic textbox, type “pageviews_topic”.

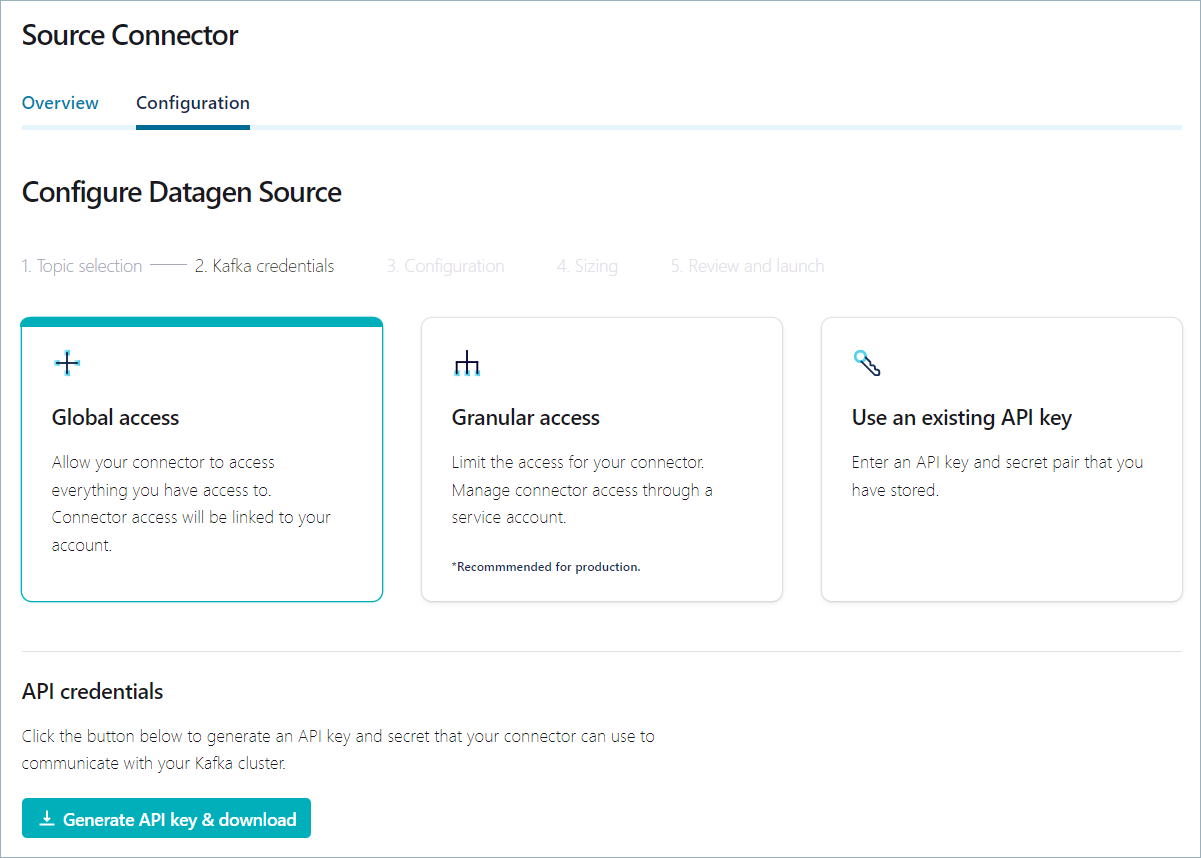

Click Continue to open the Kafka credentials page.

Ensure that the Global access tile is selected, and click Generate API key & download to create the API key for the Datagen connector.

A text file containing the newly generated API key and secret is downloaded to your local machine.

Click Continue to configure the connector’s output.

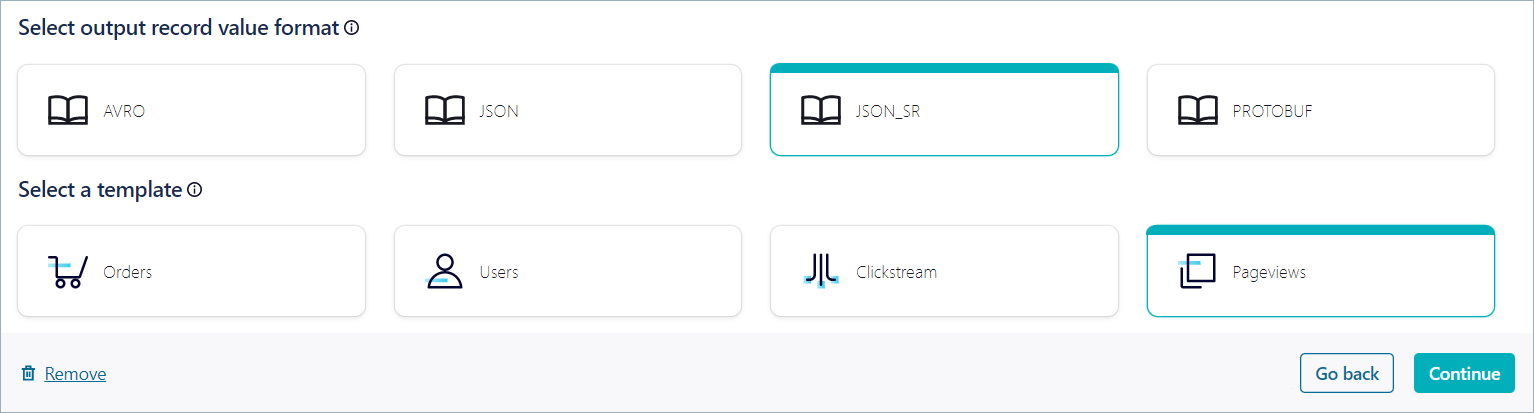

In the Select output record value format section, click JSON_SR, and in the Select a template section, click Pageviews.

Click Continue to open the Sizing page.

In the Connector sizing section, leave the minimum number of tasks at 1.

Click Continue to open the Review and launch page.

In the Connector name textbox, enter “Datagen_pageviews” and click Continue.

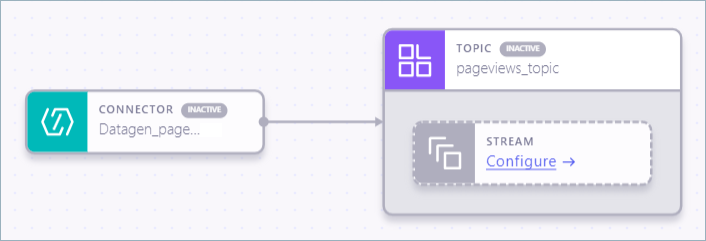

The Datagen source connector is configured and appears on the canvas with a corresponding topic component. The topic component is configured with the name you provided during connector configuration. Also, a stream is registered on the topic.

In the Topic component, right-click the stream component and select Remove.

Step 3: Manage pipeline secrets

Stream Designer enables managing pipeline secrets by using the Confluent Cloud Console, the Confluent CLI, and the Confluent Cloud REST API. Stream Designer automatically detects raw credentials in PASSWORD-type connector configuration properties. For each one, it creates a pipeline secret and replaces the value with a variable reference to that secret. If a PASSWORD-type property already contains a variable reference, Stream Designer uses the referenced secret.

Do not use variable references in connector configuration properties that have a type other than PASSWORD. Such references are always treated as literal values, not as variable references.
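The detection rules above can be sketched as a small decision function. This is a minimal illustration under stated assumptions, not Stream Designer's implementation. The PASSWORD_PROPERTIES set and the classify_property function are hypothetical. A value counts as a variable reference only when the entire value has the form ${NAME}.

```python
import re

# Illustrative stand-in: in Confluent Cloud, each connector declares which of its
# configuration properties are PASSWORD-type. These names are assumptions.
PASSWORD_PROPERTIES = {"kafka.api.key", "kafka.api.secret", "connection.password"}

# Assumption: a variable reference is the whole value, in the form ${NAME}.
REFERENCE = re.compile(r"\$\{[A-Za-z_][A-Za-z0-9_]{0,127}\}")


def classify_property(name: str, value: str) -> str:
    """Describe how the documented rules treat one connector property value."""
    if name not in PASSWORD_PROPERTIES:
        # Non-PASSWORD properties are always literals, even if they look like ${...}.
        return "literal"
    if REFERENCE.fullmatch(value):
        # The property already references a secret, so that secret is used.
        return "existing secret"
    # A raw credential: it is extracted into a new pipeline secret.
    return "new secret"


print(classify_property("kafka.api.key", "${DATAGEN_PAGEVIEWS_KAFKA_API_KEY}"))
print(classify_property("connection.password", "secret123"))
print(classify_property("kafka.topic", "${TOPIC_NAME}"))
```

The last call shows why references in non-PASSWORD properties don't work. Under these rules, the kafka.topic value is kept as the literal text ${TOPIC_NAME}.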

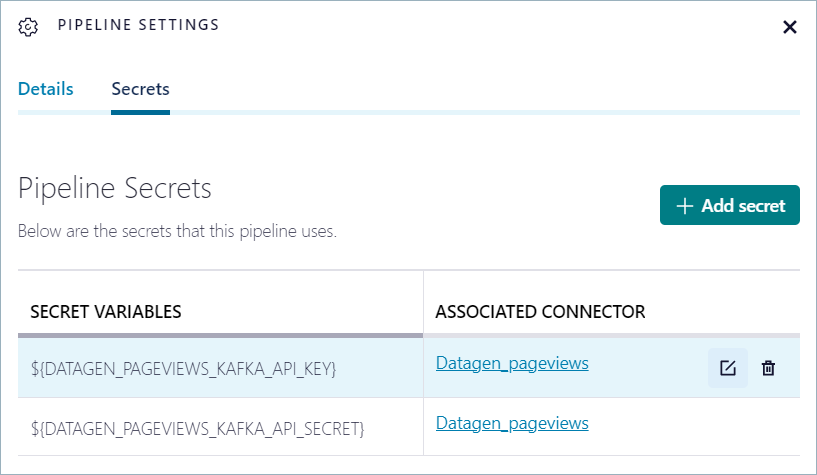

Manage pipeline secrets in Confluent Cloud Console

When you created the pipeline, you generated an API key for the Kafka cluster that hosts the pipeline. Stream Designer automatically saves secrets, like API keys, in the credential store.

Click the View source code icon. Your output should resemble:

    CREATE SOURCE CONNECTOR "Datagen_pageviews" WITH (
      "connector.class"='DatagenSource',
      "kafka.api.key"='${DATAGEN_PAGEVIEWS_KAFKA_API_KEY}',
      "kafka.api.secret"='${DATAGEN_PAGEVIEWS_KAFKA_API_SECRET}',
      "kafka.auth.mode"='KAFKA_API_KEY',
      "kafka.topic"='pageviews_topic',
      "output.data.format"='JSON_SR',
      "quickstart"='PAGEVIEWS',
      "tasks.max"='1'
    );

Stream Designer has replaced the values of kafka.api.key and kafka.api.secret with the named variables DATAGEN_PAGEVIEWS_KAFKA_API_KEY and DATAGEN_PAGEVIEWS_KAFKA_API_SECRET, based on the connector’s name.

Click the Pipeline settings icon. The Pipeline settings panel opens.

Click Secrets to view a list of the secrets that are used by the pipeline.

The connector that’s associated with the secret is shown. Hover over the connector to edit or delete the associated API key.

Note

Secret values are never displayed in Confluent Cloud Console.

Manage secrets in Confluent CLI

Use the --secret option to modify pipeline secrets by using the Confluent CLI. Include one --secret option for each secret you want to add, change, or remove. Secrets that you don't specify are left unchanged.

Important

Valid secret names consist of 1 to 128 characters, each of which is a lowercase letter, an uppercase letter, a digit, or the underscore (_) character. A secret name can't begin with a digit.
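These naming rules can be checked before you pass names to --secret. The following is a minimal sketch. The is_valid_secret_name function is hypothetical, and the pattern assumes that "letters" means ASCII letters.

```python
import re

# 1 to 128 characters: ASCII letters, digits, or underscore, not starting with a digit.
SECRET_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]{0,127}")


def is_valid_secret_name(name: str) -> bool:
    """Return True if name satisfies the documented secret-name rules."""
    # fullmatch anchors both ends, so any trailing character (even a newline) is rejected.
    return SECRET_NAME.fullmatch(name) is not None


for candidate in ["DATAGEN_PAGEVIEWS_KAFKA_API_KEY", "_tmp", "1st_key", "my-key", ""]:
    print(candidate or "<empty>", is_valid_secret_name(candidate))
```

The pattern uses fullmatch rather than match with a trailing $. In Python, $ also matches just before a final newline, so "KEY\n" would otherwise pass.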

The following command shows how to update the API key and secret for the Datagen_pageviews connector that you created earlier. The new key and secret values are in the API_KEY and API_SECRET environment variables.

    confluent pipeline update ${PIPE_ID} \
      --secret DATAGEN_PAGEVIEWS_KAFKA_API_KEY="${API_KEY}" \
      --secret DATAGEN_PAGEVIEWS_KAFKA_API_SECRET="${API_SECRET}"
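If you script secret rotation, you can build the command as an argument list instead of a shell string. The following sketch uses a hypothetical helper, pipeline_update_argv, that emits one --secret option per secret. When the list is passed directly to subprocess.run, no shell parses it, so quotation marks and spaces in values need no escaping. The sketch only builds the list; actually running it assumes the Confluent CLI is installed and you are logged in.

```python
import shlex


def pipeline_update_argv(pipe_id: str, secrets: dict[str, str]) -> list[str]:
    """Build `confluent pipeline update` arguments with one --secret per entry.

    Hypothetical helper. Each NAME=value pair is its own argv element, so no
    shell quoting or escaping is involved. An empty value removes that secret.
    """
    argv = ["confluent", "pipeline", "update", pipe_id]
    for name, value in secrets.items():
        argv += ["--secret", f"{name}={value}"]
    return argv


argv = pipeline_update_argv(
    "pipe-12345",  # placeholder pipeline ID
    {
        "DATAGEN_PAGEVIEWS_KAFKA_API_KEY": "NEWKEY",
        "DATAGEN_PAGEVIEWS_KAFKA_API_SECRET": 'new "secret" value',
    },
)
# Print an equivalent, correctly quoted shell command for review.
print(shlex.join(argv))
```

To run the update, you could call subprocess.run(argv, check=True). Printing shlex.join(argv) first lets you review the exact command without exposing it to shell re-parsing.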

Embedded quotation marks

Any quotation mark characters (" and ') within a secret value must be escaped. Otherwise, the shell treats them as quoting characters and passes only the text between them to the CLI. Values can contain embedded spaces if they are quoted.

The following examples show valid assignments that use quotation marks and other characters.

    --secret name1=value-with,and=
    --secret name2="value-with\"and'"
    --secret name4="abc"
    --secret name5='abc'
    --secret name7="secret with space"

Escape any quotes that you want included in the secret value, for example:

    --secret name6=\"abc\'

The shell removes unescaped quotes and joins the adjacent substrings, so the following example evaluates to a secret value of abcdef:

    --secret name8=abc"def"

To remove a secret, assign an empty or blank secret value, for example:

    --secret name3=
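To check which value the CLI actually receives for a given assignment, you can tokenize the command line the way a POSIX shell does. The sketch below runs assignments like the ones in this section through Python's shlex module in POSIX mode. That mode follows sh-style quoting and escaping rules closely but doesn't expand variables. The secrets_after_shell_parsing helper is hypothetical and assumes that each assignment is split at its first =.

```python
import shlex

# Example assignments as typed on a POSIX shell command line.
COMMAND_LINE = r'''
--secret name1=value-with,and=
--secret name2="value-with\"and'"
--secret name4="abc"
--secret name5='abc'
--secret name7="secret with space"
--secret name6=\"abc\'
--secret name8=abc"def"
--secret name3=
'''


def secrets_after_shell_parsing(command_line: str) -> dict[str, str]:
    """Return name -> value as the CLI would receive them after shell quote removal."""
    tokens = shlex.split(command_line)  # POSIX-mode tokenization, close to sh/bash
    secrets = {}
    for flag, assignment in zip(tokens[0::2], tokens[1::2]):
        if flag != "--secret":
            raise ValueError(f"unexpected token: {flag!r}")
        name, _, value = assignment.partition("=")  # split at the first '='
        secrets[name] = value
    return secrets


for name, value in secrets_after_shell_parsing(COMMAND_LINE).items():
    print(f"{name}: {value!r}")
```

In the output, name2 keeps an embedded double quote and a single quote, name6 is "abc' including both quote characters, name8 is abcdef, and name3 is the empty string that removes the secret.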

Manage secrets with Confluent Cloud REST API

The Pipelines REST API enables managing Stream Designer pipelines programmatically. For more information, see Manage Pipelines With the REST API for Stream Designer on Confluent Cloud.

Run the following command to get the secret names for a specific pipeline. The jq query selects the .spec.secrets property from the JSON response. Ensure that the PIPE_ID, ENV_ID, and CLUSTER_ID environment variables are set to your pipeline ID, environment ID, and Kafka cluster ID.

    curl -s -H "Authorization: Bearer $TOKEN" \
      -X GET "https://api.confluent.cloud/sd/v1/pipelines/${PIPE_ID}?environment=${ENV_ID}&spec.kafka_cluster=${CLUSTER_ID}" | \
      jq .spec.secrets
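The same GET request can be built in code. This sketch uses only the Python standard library. The get_pipeline_request and secret_names helpers are hypothetical. The request is constructed but not sent, and the token is assumed to be the same bearer token that the curl command uses.

```python
from urllib.parse import urlencode
from urllib.request import Request

API_BASE = "https://api.confluent.cloud/sd/v1/pipelines"


def get_pipeline_request(token: str, pipe_id: str, env_id: str, cluster_id: str) -> Request:
    """Build (without sending) the GET request that the curl command issues."""
    query = urlencode({"environment": env_id, "spec.kafka_cluster": cluster_id})
    return Request(
        f"{API_BASE}/{pipe_id}?{query}",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def secret_names(pipeline: dict) -> list[str]:
    """Like `jq .spec.secrets`, but keep only the names; the values are masked anyway."""
    return sorted(pipeline.get("spec", {}).get("secrets", {}))


req = get_pipeline_request("TOKEN", "pipe-12345", "env-abc12", "lkc-xyz34")  # placeholders
print(req.get_method(), req.full_url)
```

To send the request, you could pass it to urllib.request.urlopen, decode the body with json.load, and call secret_names on the result.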

Your output should resemble:

    {
      "DATAGEN_PAGEVIEWS_KAFKA_API_KEY": "*****************",
      "DATAGEN_PAGEVIEWS_KAFKA_API_SECRET": "*****************"
    }

Note

Secret values are never displayed in REST API responses.

Update the secrets by using a PATCH request. Create a file named ${PIPE_ID}-patch.json and copy the following JSON into the file. Replace <env-id> and <kafka-cluster-id> with your environment and Kafka cluster ID values. Replace <kafka-cluster-api-key> and <kafka-cluster-api-secret> with your updated Kafka cluster API key and secret values.

    {
      "spec": {
        "environment": { "id": "<env-id>" },
        "kafka_cluster": { "id": "<kafka-cluster-id>" },
        "secrets": {
          "DATAGEN_PAGEVIEWS_KAFKA_API_KEY": "<kafka-cluster-api-key>",
          "DATAGEN_PAGEVIEWS_KAFKA_API_SECRET": "<kafka-cluster-api-secret>"
        }
      }
    }

Run the following command to patch the pipeline with the updated secret.

    curl -s -H "Authorization: Bearer $TOKEN" \
      -X PATCH -H "Content-Type: application/json" \
      -d @${PIPE_ID}-patch.json \
      "https://api.confluent.cloud/sd/v1/pipelines/${PIPE_ID}?environment=${ENV_ID}&spec.kafka_cluster=${CLUSTER_ID}" | jq .
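The following is a sketch of the same PATCH request built in Python, assuming the payload shape of the JSON file above. The patch_secrets_request helper is hypothetical, and the request is built but not sent. Serializing the body with json.dumps escapes any quotation marks in secret values, which avoids hand-editing a JSON file.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

API_BASE = "https://api.confluent.cloud/sd/v1/pipelines"


def patch_secrets_request(token: str, pipe_id: str, env_id: str, cluster_id: str,
                          secrets: dict[str, str]) -> Request:
    """Build (without sending) a PATCH request that updates pipeline secrets."""
    body = {
        "spec": {
            "environment": {"id": env_id},
            "kafka_cluster": {"id": cluster_id},
            "secrets": secrets,
        }
    }
    query = urlencode({"environment": env_id, "spec.kafka_cluster": cluster_id})
    return Request(
        f"{API_BASE}/{pipe_id}?{query}",
        data=json.dumps(body).encode("utf-8"),  # json.dumps escapes quotes in values
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="PATCH",
    )


req = patch_secrets_request(
    "TOKEN", "pipe-12345", "env-abc12", "lkc-xyz34",  # placeholders
    {"DATAGEN_PAGEVIEWS_KAFKA_API_KEY": "NEWKEY",
     "DATAGEN_PAGEVIEWS_KAFKA_API_SECRET": 'with "quotes"'},
)
print(req.get_method(), req.data.decode("utf-8"))
```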

How Stream Designer stores secrets

You can reference secrets within the source code of a pipeline, but only as the value of PASSWORD-type configuration properties in CREATE SOURCE CONNECTOR and CREATE SINK CONNECTOR statements.

The following SQL shows an example CREATE SOURCE CONNECTOR statement for a Postgres source connector. The statement references secrets for the Kafka cluster API key, the Kafka cluster API secret, and the database password.

    CREATE SOURCE CONNECTOR IF NOT EXISTS recipe_postgres_discount_codes WITH (
      'connector.class' = 'PostgresSource',
      'kafka.api.key' = '${PG_CONNECTOR_API_KEY}',
      'kafka.api.secret' = '${PG_CONNECTOR_API_SECRET}',
      'connection.host' = 'mydatabase.abc123ecs2.us-west.rds.amazonaws.com',
      'connection.port' = '5432',
      'connection.user' = 'postgres',
      'connection.password' = '${PG_CONNECTOR_PASSWORD}',
      'db.name' = 'flights',
      'table.whitelist' = 'discount_codes',
      'timestamp.column.name' = 'timestamp',
      'output.data.format' = 'JSON',
      'db.timezone' = 'UTC',
      'tasks.max' = '1'
    );
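Before you create a pipeline from source code like this, it can help to list which secret names the code references, so that you can supply a value for each one. The referenced_secrets helper below is a hypothetical sketch. It finds ${NAME} patterns anywhere in the text and doesn't check whether each one appears in a PASSWORD-type property.

```python
import re

# Matches ${NAME} references whose NAME follows the secret-name rules.
REFERENCE = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]{0,127})\}")


def referenced_secrets(sql: str) -> list[str]:
    """Return distinct secret names referenced in pipeline source, in order of first use."""
    return list(dict.fromkeys(REFERENCE.findall(sql)))


SQL = """
CREATE SOURCE CONNECTOR IF NOT EXISTS recipe_postgres_discount_codes WITH (
  'connector.class' = 'PostgresSource',
  'kafka.api.key' = '${PG_CONNECTOR_API_KEY}',
  'kafka.api.secret' = '${PG_CONNECTOR_API_SECRET}',
  'connection.password' = '${PG_CONNECTOR_PASSWORD}'
);
"""

print(referenced_secrets(SQL))
```

Each name in the result corresponds to one NAME=value assignment that you would pass with --secret.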

You can use the Stream Designer REST API or the Confluent CLI to create and manage pipelines. In either case, you must use variable references for secrets within the pipeline’s CREATE SOURCE CONNECTOR and CREATE SINK CONNECTOR statements.

Stream Designer automatically extracts the secret for every connector configuration property that is known to be a password.

For example, Confluent Cloud recognizes that the connection.password property of the Postgres source connector is of type PASSWORD. If your pipeline has a CREATE SOURCE CONNECTOR statement that includes anything other than a secret variable reference for the connection.password property, Stream Designer automatically creates a new secret and uses a variable reference to the secret in the property.

Characters in the connector name and property name that are not allowed in secret names are converted to _, and the remaining characters are uppercased.

Stream Designer detects literal values like the following:

    'connection.password' = 'secret123'

Stream Designer replaces such values with a variable reference:

    'connection.password' = '${<connector_name>_CONNECTION_PASSWORD}'
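The extraction and naming behavior can be sketched as follows. This sketch makes three assumptions:

* The connector name and property name are joined with an underscore. This is inferred from the DATAGEN_PAGEVIEWS_KAFKA_API_KEY example.
* PASSWORD_PROPERTIES is an illustrative stand-in for the property types that Confluent Cloud knows.
* The collected values are returned in plain text, whereas Stream Designer encrypts them.

The derived_secret_name and extract_secrets helpers are hypothetical.

```python
import re

# Illustrative stand-in for the properties Confluent Cloud knows to be PASSWORD-type.
PASSWORD_PROPERTIES = {"kafka.api.key", "kafka.api.secret", "connection.password"}
REFERENCE = re.compile(r"\$\{[A-Za-z_][A-Za-z0-9_]{0,127}\}")


def derived_secret_name(connector_name: str, property_name: str) -> str:
    """Join the names with '_', map disallowed characters to '_', and uppercase."""
    # Assumption: the '_' separator is inferred from DATAGEN_PAGEVIEWS_KAFKA_API_KEY.
    # Length limits and names that start with a digit are not handled here.
    return re.sub(r"[^A-Za-z0-9_]", "_", f"{connector_name}_{property_name}").upper()


def extract_secrets(connector_name: str,
                    config: dict[str, str]) -> tuple[dict[str, str], dict[str, str]]:
    """Replace literal PASSWORD-type values with ${NAME} references.

    Returns the rewritten config and the extracted name -> value pairs. The real
    service encrypts the extracted values; this sketch just returns them.
    """
    rewritten, secrets = {}, {}
    for prop, value in config.items():
        if prop in PASSWORD_PROPERTIES and not REFERENCE.fullmatch(value):
            name = derived_secret_name(connector_name, prop)
            secrets[name] = value
            rewritten[prop] = "${" + name + "}"
        else:
            rewritten[prop] = value  # existing references and other properties are kept
    return rewritten, secrets


config, secrets = extract_secrets(
    "pg_source",  # hypothetical connector name
    {"connection.user": "postgres", "connection.password": "secret123"},
)
print(config)   # connection.password now holds ${PG_SOURCE_CONNECTION_PASSWORD}
print(secrets)
```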

Stream Designer encrypts the detected secret value, for example, secret123, and stores it securely. When the connector configuration is needed for pipeline activation, the ${<connector_name>_CONNECTION_PASSWORD} reference is resolved to the secret value from secure storage. The REST API never returns the secret value to clients, but Stream Designer sends it to the Connect API when it provisions or updates the connector.
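The following sketch shows the resolution step under illustrative assumptions. A plain dictionary, secret_store, stands in for the encrypted storage. The hypothetical resolve_for_connect_api helper substitutes a secret only where a PASSWORD-type property holds a ${NAME} reference and leaves every other value as a literal.

```python
import re

# Illustrative stand-in for the properties Confluent Cloud knows to be PASSWORD-type.
PASSWORD_PROPERTIES = {"kafka.api.key", "kafka.api.secret", "connection.password"}
REFERENCE = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]{0,127})\}")


def resolve_for_connect_api(config: dict[str, str],
                            secret_store: dict[str, str]) -> dict[str, str]:
    """Substitute secret values for ${NAME} references in PASSWORD-type properties.

    The result corresponds to the configuration sent to the Connect API, not to
    anything returned to clients. A missing secret raises KeyError rather than
    sending an unresolved reference.
    """
    resolved = {}
    for prop, value in config.items():
        match = REFERENCE.fullmatch(value)
        if prop in PASSWORD_PROPERTIES and match:
            resolved[prop] = secret_store[match.group(1)]
        else:
            resolved[prop] = value  # non-PASSWORD values stay literal
    return resolved


store = {"PG_SOURCE_CONNECTION_PASSWORD": "secret123"}  # stands in for encrypted storage
pipeline_config = {
    "connection.user": "postgres",
    "connection.password": "${PG_SOURCE_CONNECTION_PASSWORD}",
}
print(resolve_for_connect_api(pipeline_config, store))
```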

When you view the pipeline source code, all secret values are masked.

Use the --secret option to specify passwords and API keys when you create a pipeline from source code.

    confluent pipeline create --name "Test pipeline" \
      --description "Pipeline with secrets" \
      --sql-file "<source-code-file>" \
      --ksql-cluster <ksqldb-cluster-id> \
      --secret "<connector_name-1>_CONNECTION_PASSWORD=<password-1>" \
      --secret "<connector_name-2>_CONNECTION_PASSWORD=<password-2>" \
      --secret "<connector_name-3>_CONNECTION_PASSWORD=<password-3>"